The combination of AWS and Terraform for deploying infrastructure is one of the most common patterns that we see clients use. Of course, some people will choose to use Azure and Terraform, but the common denominator here is Terraform.

Most clients also use GitHub to store their code and configurations for their infrastructure and applications. The final piece to this puzzle is the pipeline used to build, test, run, and deploy your code.

Since Microsoft acquired GitHub, GitHub has been working aggressively towards becoming a one-stop shop for everything from storing your code through to deploying it to your production environments. It would make sense, then, to use these three technologies (AWS, Terraform, and GitHub) for everything that you need.

As a side note - if you are already using tooling that your engineers are experts in, then we’re not advocating that you drop those in favour of these specific tools - after all, it’s the process rather than the tooling that’s important. However, these three tools together create quite a compelling trifecta, especially if you’re just starting out or trying to amalgamate a set of disparate tooling into a standardised set.

In this post, we’ll detail a method that you can adopt to bootstrap your Terraform code ready to deploy into AWS, work on the code within GitHub, run testing using GitHub Actions, and then finally we’ll look at deployment and pushing changes.

There are a number of ways to approach this, and there are of course far more complex scenarios that we both deploy and support as part of our client work. This simplified approach, however, is possibly the gold standard for those just starting out in this space, or for those who are looking to manage their code and deployments in a simple and manageable way.

Let’s Presume A Thing or Two

There are so many resources out there for learning AWS, Terraform, and GitHub, ranging from the very basic to the very advanced, that we’re not going to cover the fundamentals here. We’ll explain everything that we do; however, there are a few prerequisites and presumptions that we’re going to make:

- You have a GitHub account

- You’re happy to create a repo to hold the code we’re going through

- You know what Pull Requests and Merges are

- You have an AWS account (a Free Tier account is perfectly fine to run through this solution)

- You have a basic working knowledge of the fundamentals of Terraform

- You have credentials to your AWS account stored in ~/.aws/credentials that have the appropriate permissions to deploy S3 and DynamoDB resources

As mentioned, we’ll walk through each step, so you should be able to follow along and understand what’s happening here without too much difficulty.

Taking the first step in integrating AWS and Terraform

This is an issue that I’ve heard countless times - “I want to manage all of my infrastructure and applications within Terraform, however before I can do that I need to create AWS resources to hold the remote state. Which means that the remote state isn’t defined from within Terraform….which means that I’m not managing all of my resources from within Terraform….”. The question raises an interesting chicken and egg scenario, and it’s very easy to go around in circles and come up with more and more complex solutions in order to solve this issue.

What we’re going to do here is step through a simple procedure to bring your remote state under Terraform management without doing anything too complex. We’ll also walk you through the code, and provide templates so that you can take advantage of this solution yourself.

In a nutshell, what we’ll accomplish in this section is the following:

- Initialise and clone a GitHub repo

- Add some code to the repo

- Configure authentication between GitHub and AWS

- Create the resources required for the Terraform Remote State Backend

- Import the state into the remote backend so nothing is stored locally

Creating Our Repo and Directory Structure

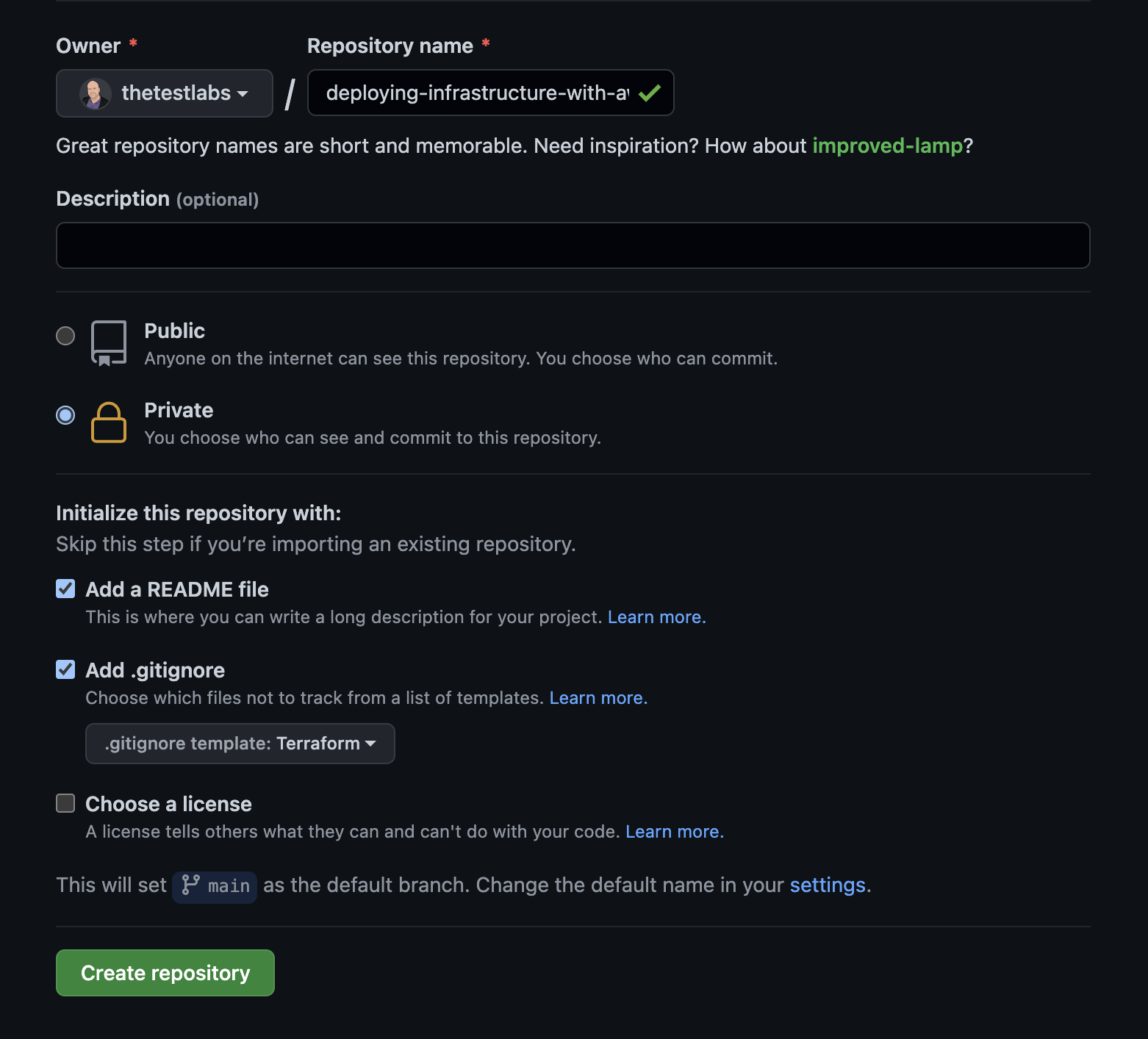

First thing’s first, we need somewhere to store our code! Head over to your GitHub account and create a new repository:

The next step is to add the remaining files that we will need, so that what we should end up with in our repository is a very simple file structure that looks like this:

    git_directory
    |   .gitignore
    |   README.md
    |   datasources.tf
    |   github_federation.tf
    |   locals.tf
    |   security_group.tf
    |   terraform.tf
    |   tf_remote_state.tf
    |   variables.tf
    |   vpc.tf

Initial Terraform Configuration

There are a couple of bits and pieces that we need to do in order to make Terraform work, such as defining our providers. We should also probably grab some information from the account that we will use a little bit later as references.

Let’s start by populating the following files with the code shown below.

datasources.tf

    data "aws_caller_identity" "current" {}
    data "aws_region" "current" {}

locals.tf

    locals {
      common_tags = {
        Demo      = true
        Terraform = true
      }
    }

terraform.tf

    # Terraform Block
    terraform {
      required_version = "~> 1.0.11"

      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = ">= 3.68.0"
        }
      }
    }

    # Configure the AWS Provider
    provider "aws" {
      region = "eu-west-1"
    }
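The resources later in this post reference var.remote_state_prefix, but the contents of variables.tf are never shown. A minimal sketch of that file might look like the following. The default value is an assumption, inferred from the bucket name that appears in the backend configuration further down, so adjust it to suit your own naming.

```hcl
# variables.tf
# Assumed definition: the post references var.remote_state_prefix without
# showing it. The default mirrors the bucket name used in the backend block
# ("terraform-remote-state-resource-<account id>").
variable "remote_state_prefix" {
  description = "Prefix for the S3 bucket and DynamoDB table that hold the Terraform remote state"
  type        = string
  default     = "terraform-remote-state-resource"
}
```

Keeping the prefix in a variable means the bucket and table names stay in step with each other, since both are built from the same prefix plus the account ID.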

The Basic Resources

Now that’s configured, let’s start adding some resources. For the remote state, we will need an S3 Bucket to store the state files, and a DynamoDB table to handle locking so that multiple people can’t update the state simultaneously.

There are a couple of ways we could do this, including going through the process manually - but why on earth would we be doing that if we can just write a few lines of code? Plus, as with most things in Terraform, there are modules for that, so there’s even less code to write!

Firstly, populate the tf_remote_state.tf file with the following code:

    # Terraform Remote State

    # Create S3 Bucket
    resource "aws_s3_bucket" "terraform_state_bucket" {
      bucket = "${var.remote_state_prefix}-${data.aws_caller_identity.current.account_id}"

      server_side_encryption_configuration {
        rule {
          apply_server_side_encryption_by_default {
            sse_algorithm = "AES256"
          }
        }
      }

      lifecycle {
        prevent_destroy = true
      }

      versioning {
        enabled = true
      }

      tags = local.common_tags
    }

The above code does the following:

- Creates an S3 Bucket

- Enables Server Side Encryption

- Enables Versioning
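One caveat: the provider constraint used in terraform.tf (>= 3.68.0) also permits much newer AWS provider releases, and from version 4 onwards the inline versioning and server_side_encryption_configuration blocks are deprecated in favour of standalone resources. If you are on a newer provider, a rough equivalent might look like the sketch below. This is not the original author's code, and the public access block is an extra hardening step that the original does not include.

```hcl
# Sketch for AWS provider v4 or later. These resources replace the inline
# blocks inside aws_s3_bucket.terraform_state_bucket, which would then keep
# only its bucket, lifecycle and tags arguments.
resource "aws_s3_bucket_versioning" "terraform_state_bucket" {
  bucket = aws_s3_bucket.terraform_state_bucket.id

  versioning_configuration {
    status = "Enabled"
  }
}

resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state_bucket" {
  bucket = aws_s3_bucket.terraform_state_bucket.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# Not in the original post: blocks all public access to the state bucket.
resource "aws_s3_bucket_public_access_block" "terraform_state_bucket" {
  bucket = aws_s3_bucket.terraform_state_bucket.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```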

Next, we’ll add the DynamoDB table for locking. Open up the tf_remote_state.tf file again, and add the following below the S3 bucket resource:

    # DynamoDB Table
    resource "aws_dynamodb_table" "terraform_state_lock" {
      name         = "${var.remote_state_prefix}-${data.aws_caller_identity.current.account_id}"
      billing_mode = "PAY_PER_REQUEST"
      hash_key     = "LockID"

      attribute {
        name = "LockID"
        type = "S"
      }

      tags = local.common_tags
    }

The code we’ve just added does the following:

- Creates a DynamoDB table with LockID as its hash key

- Sets the billing mode to pay-per-request, as this will be the cheapest way to manage the state here
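Both resource names are built from the prefix and your account ID, and the backend block later in this post needs them as literal strings. One optional convenience, which is not part of the original post, is to expose them as outputs so that terraform apply prints the exact values to copy. The file name and output names here are hypothetical.

```hcl
# outputs.tf (hypothetical file, not part of the repository layout shown above)
output "remote_state_bucket" {
  description = "Name of the S3 bucket that stores the Terraform state"
  value       = aws_s3_bucket.terraform_state_bucket.bucket
}

output "remote_state_lock_table" {
  description = "Name of the DynamoDB table used for state locking"
  value       = aws_dynamodb_table.terraform_state_lock.name
}
```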

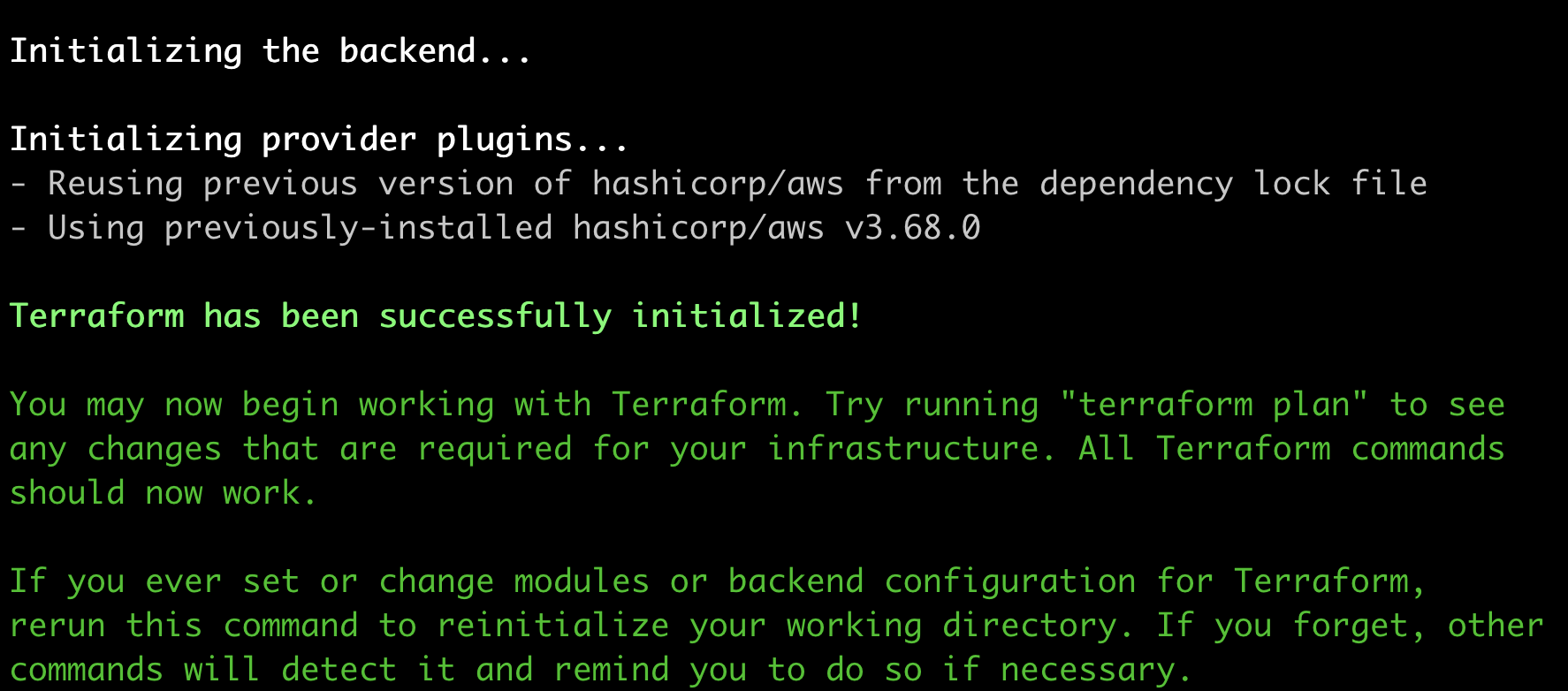

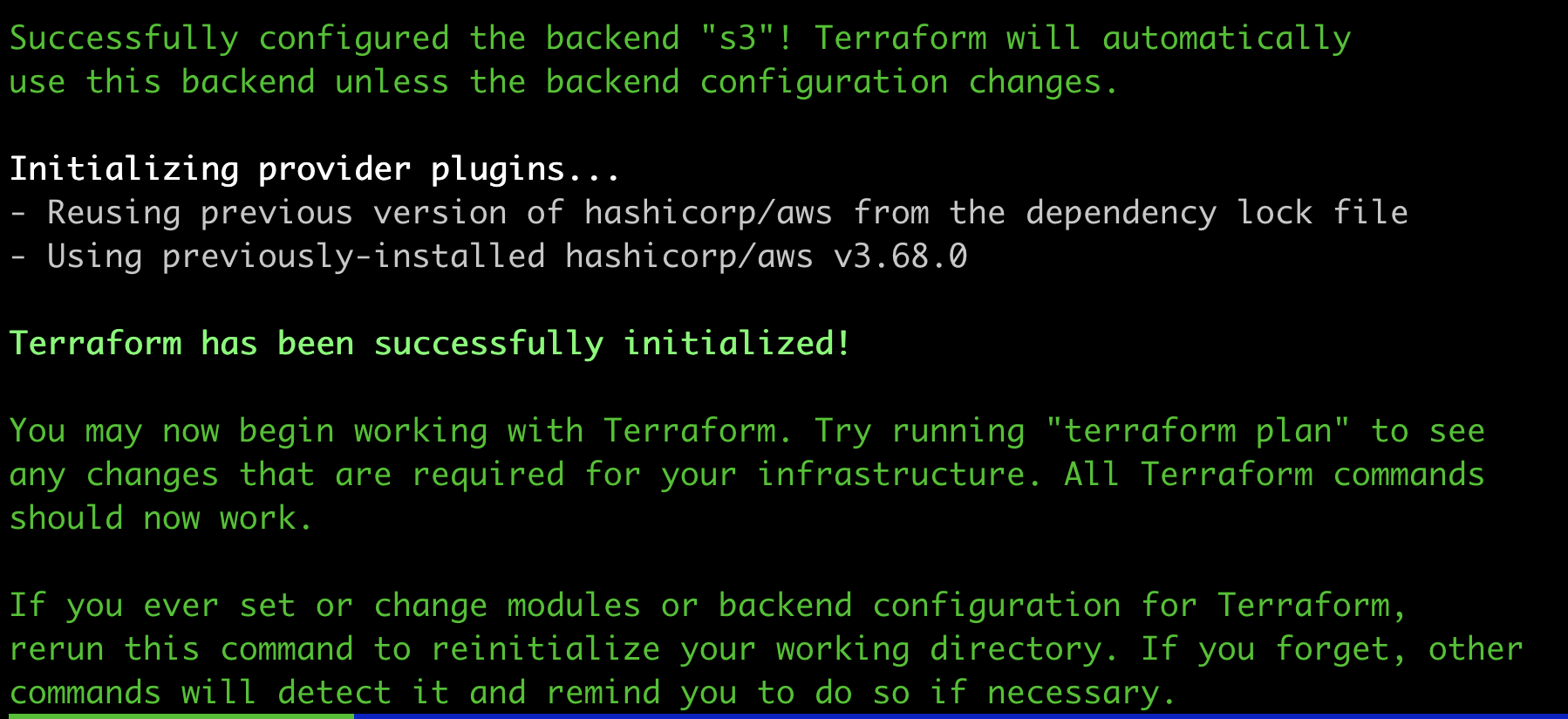

Let’s now run terraform init to make sure there are no errors in initialising Terraform, then we can run terraform plan and, all being well, terraform apply.

Running terraform init should give you something that looks like this when you initialise the directory:

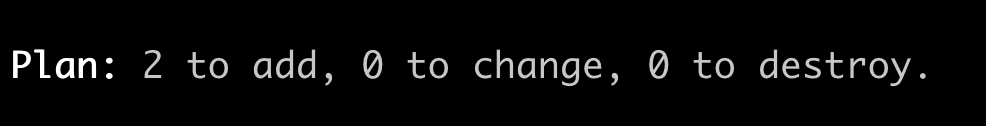

When you run terraform plan, the output will show the two resources and their attributes. It’s good practice to go through this list and check exactly what will be deployed; however, the key thing to look for here is that it will deploy the two resources that we have defined:

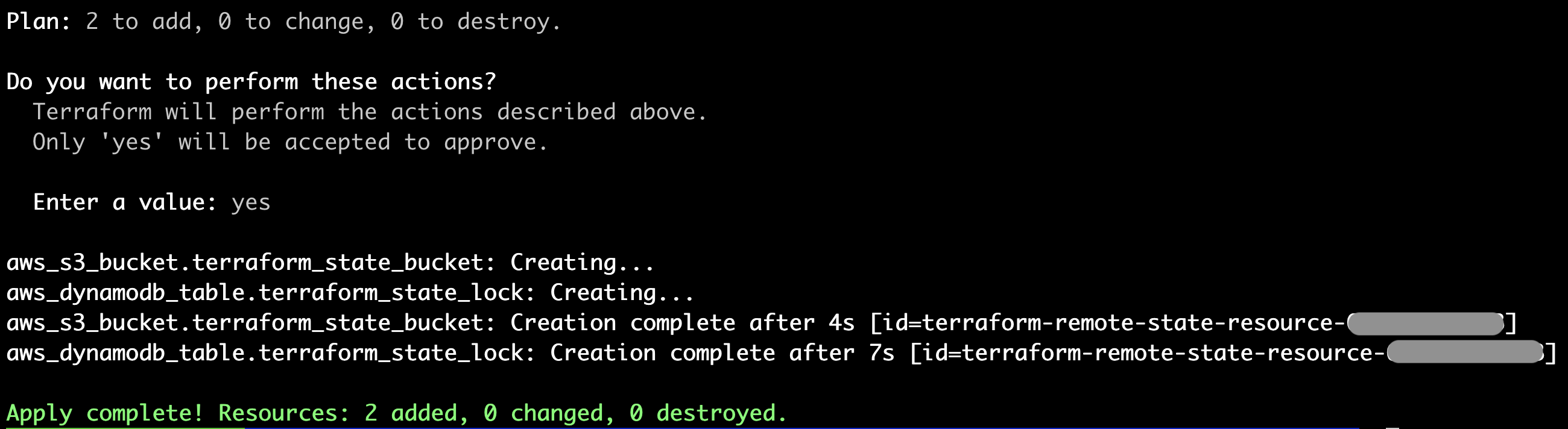

Finally, running terraform apply should result in confirmation that the resources have been deployed:

Migrate the Terraform State to the Newly Created Remote Backend

Awesome, we now have resources deployed into AWS! However, at this point they are only tracked in a local state file, so we still need to move that state into the remote backend that we’ve just created.

The Terraform documentation notes an important limitation when defining a backend configuration:

- A backend block cannot refer to named values (like input variables, locals, or data source attributes).

As a result, we need to hardcode the values rather than reference them. Add the backend block shown below to the terraform.tf file, making sure that you choose the correct region and replace the account number placeholder 1234567890 with your actual account number:

    # Terraform Block
    terraform {
      required_version = "~> 1.0.11"

      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = ">= 3.68.0"
        }
      }

      backend "s3" {
        bucket         = "terraform-remote-state-resource-1234567890"
        key            = "terraform.tfstate"
        region         = "eu-west-1"
        dynamodb_table = "terraform-remote-state-resource-1234567890"
        encrypt        = true
      }
    }

    # Configure the AWS Provider
    provider "aws" {
      region = "eu-west-1"
    }

Once this code is added, we can run terraform init again, which will identify the fact that a remote backend has been provisioned and ask if we want to copy over the local state to the new “S3” backend. The answer, of course, is yes!
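Because the backend block cannot use variables, an alternative to hardcoding every value in terraform.tf is Terraform's partial backend configuration: leave the backend "s3" block empty and pass the settings at init time with terraform init -backend-config=backend.hcl. The sketch below shows what such a file might contain. The file name backend.hcl is an assumption, and the values simply mirror the placeholders used above.

```hcl
# backend.hcl (hypothetical file name)
# Used together with an empty block in terraform.tf:
#   backend "s3" {}
# and passed at init time:
#   terraform init -backend-config=backend.hcl
bucket         = "terraform-remote-state-resource-1234567890"
key            = "terraform.tfstate"
region         = "eu-west-1"
dynamodb_table = "terraform-remote-state-resource-1234567890"
encrypt        = true
```

This keeps account-specific values out of the main configuration, which can help if the same code is ever deployed to more than one account.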

Configure GitHub Authentication with AWS

Now that we have our code in GitHub and have configured all of the prerequisites along with a remote backend under Terraform management, we probably want to deploy some stuff! To do this, we’re going to use GitHub Actions, and the first step here is to enable authentication between your GitHub repo and your AWS account.

In order to do this, we’re going to add some code to the github_federation.tf file to make the magic happen. This particular magic uses OpenID Connect (OIDC), which allows a GitHub Actions workflow to assume an IAM role within your account in order to deploy services into AWS.

The complete code for github_federation.tf should be as follows (don’t worry, we’ll go through it and explain what we’re doing in a minute!):

    resource "aws_iam_role" "GithubOidcRole" {
      name = "GithubOidcRole"

      managed_policy_arns = [
        "arn:aws:iam::aws:policy/AmazonEC2FullAccess"
      ]

      assume_role_policy = jsonencode({
        "Version" : "2012-10-17",
        "Statement" : {
          "Sid" : "GithubOidcRole",
          "Effect" : "Allow",
          "Principal" : { "Federated" : "arn:aws:iam::641772128763:oidc-provider/token.actions.githubusercontent.com" },
          "Action" : "sts:AssumeRoleWithWebIdentity",
          "Condition" : { "StringLike" : { "token.actions.githubusercontent.com:sub" : "repo:thetestlabs/ttl-sandbox:*" } }
        }
      })
    }

    resource "aws_iam_openid_connect_provider" "github" {
      url = "https://token.actions.githubusercontent.com"

      client_id_list = [
        "https://github.com/thetestlabs",
      ]

      thumbprint_list = [
        "a031c46782e6e6c662c2c87c76da9aa62ccabd8e"
      ]
    }

So we’re basically doing four things here:

- Creating an IAM role called “GithubOidcRole”

- Attaching the AWS managed policy “AmazonEC2FullAccess” to the role

- Allowing access from a specific GitHub account and a specific repo. In this case:

  - Account: thetestlabs

  - Repo: ttl-sandbox

- Adding an identity provider, specifically token.actions.githubusercontent.com

Remember to replace the AWS account ID, GitHub account, and repository name with your own values.
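If you would rather not hardcode the account ID in the trust policy, one option is to reference the identity provider resource directly and build the policy with the aws_iam_policy_document data source. The following is a sketch of that variant rather than the original author's code. It is meant to replace the aws_iam_role resource above (both use the same role name, so only one can exist), and the variables github_org and github_repo are hypothetical names introduced for illustration.

```hcl
# Hypothetical input variables; the original hardcodes these values.
variable "github_org" {
  description = "GitHub account or organisation that owns the repository"
  type        = string
  default     = "thetestlabs"
}

variable "github_repo" {
  description = "Repository that is allowed to assume the role"
  type        = string
  default     = "ttl-sandbox"
}

# Trust policy: only workflows from the given repository may assume the role.
data "aws_iam_policy_document" "github_oidc_assume_role" {
  statement {
    sid     = "GithubOidcRole"
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type = "Federated"
      # Reference the provider resource instead of a hardcoded account ID
      identifiers = [aws_iam_openid_connect_provider.github.arn]
    }

    condition {
      test     = "StringLike"
      variable = "token.actions.githubusercontent.com:sub"
      values   = ["repo:${var.github_org}/${var.github_repo}:*"]
    }
  }
}

resource "aws_iam_role" "github_oidc" {
  name               = "GithubOidcRole"
  assume_role_policy = data.aws_iam_policy_document.github_oidc_assume_role.json
}

resource "aws_iam_role_policy_attachment" "github_oidc_ec2" {
  role       = aws_iam_role.github_oidc.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"
}
```

Referencing the provider's ARN also makes the dependency explicit, so Terraform creates the identity provider before the role that trusts it.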

Be very careful about the policy that you attach to the role, since you will want to reduce the blast radius of deployments, especially terraform destroy operations.
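On the same theme, AmazonEC2FullAccess does not by itself grant access to S3 or DynamoDB, so a workflow assuming this role would presumably also need permission to read and write the remote state. A minimal sketch of a narrowly scoped inline policy is shown below. The policy name is hypothetical, and the action list follows the permissions described in the Terraform S3 backend documentation, so treat it as a starting point rather than a definitive set.

```hcl
# Sketch of an inline policy giving the GitHub role access to the remote state.
# It refers to the bucket, table and role defined earlier in this post; if you
# renamed the role resource, adjust the reference accordingly.
data "aws_iam_policy_document" "terraform_state_access" {
  statement {
    sid       = "ListStateBucket"
    actions   = ["s3:ListBucket"]
    resources = [aws_s3_bucket.terraform_state_bucket.arn]
  }

  statement {
    sid       = "ReadWriteStateObject"
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["${aws_s3_bucket.terraform_state_bucket.arn}/terraform.tfstate"]
  }

  statement {
    sid = "StateLocking"
    actions = [
      "dynamodb:DescribeTable",
      "dynamodb:GetItem",
      "dynamodb:PutItem",
      "dynamodb:DeleteItem",
    ]
    resources = [aws_dynamodb_table.terraform_state_lock.arn]
  }
}

resource "aws_iam_role_policy" "terraform_state_access" {
  name   = "TerraformStateAccess"
  role   = aws_iam_role.GithubOidcRole.id
  policy = data.aws_iam_policy_document.terraform_state_access.json
}
```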

Writing our GitHub Action

GitHub Actions are incredibly extensible, and with some patience and planning you should be able to accomplish the majority of CI and CD requirements you have.

For this example, we are going to set up two workflows:

- When code is pushed to a feature branch

  - Code linting takes place to ensure that the code conforms to formatting guidelines

  - The code is validated to ensure that we are deploying valid resources

  - A plan is generated so that we can check that what will be deployed into our account is what we expect

- When code is merged into the main branch

  - All of the above checks are run

  - terraform apply will be the final command, deploying [services] into the account

  - Note that applying automatically like this is not a good idea in production environments, where there needs to be at least some gating in place

Workflows are stored in the following location, so go ahead and create the relevant directories and files:

    git_directory
    |   .gitignore
    |   README.md
    |   datasources.tf
    |   github_federation.tf
    |   locals.tf
    |   security_group.tf
    |   terraform.tf
    |   tf_remote_state.tf
    |   variables.tf
    |   vpc.tf
    |
    └─── .github
         │
         └─── workflows
              │   feature_push.yml
              │   main_merge.yml

The contents of feature_push.yml should be as follows:

    # This workflow reads its settings from .github/workflows/_config.json, which is
    # not shown in this post. The jq filters below assume a shape along these lines
    # (the "default" key is a hypothetical name):
    #   { "default": { "terraform": { "working_directory": ".", "enabled": true,
    #                                 "fmt": true, "validate": true, "plan": true } } }
    name: Terraform

    on:
      push:
        branches:
          - main
          - 'feature**'
      pull_request:
        branches:
          - main

    jobs:
      build:
        name: Run Terraform Steps
        runs-on: ubuntu-latest
        permissions:
          id-token: write
          contents: read

        steps:
          # Checkout the repository to the GitHub Actions runner
          - name: Checkout
            uses: actions/checkout@v2

          # Install the Terraform CLI. The version is pinned so that it satisfies
          # required_version = "~> 1.0.11" in terraform.tf (the action installs the
          # latest release by default). The credentials token is only relevant if
          # you also use Terraform Cloud.
          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v1
            with:
              terraform_version: 1.0.11
              cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

          # Initialize a new or existing Terraform working directory by creating initial files, loading any remote state, downloading modules, etc.
          - name: Terraform Init
            # working-directory: ./terraform # ${working_directory}
            run: |
              # Export credentials (replace the account ID with your own)
              export AWS_ROLE_ARN=arn:aws:iam::546876102050:role/GithubOidcRole
              export AWS_WEB_IDENTITY_TOKEN_FILE=/tmp/awscreds
              # Region changed to match the one used in terraform.tf
              export AWS_DEFAULT_REGION=eu-west-1
              echo AWS_WEB_IDENTITY_TOKEN_FILE=$AWS_WEB_IDENTITY_TOKEN_FILE >> $GITHUB_ENV
              echo AWS_ROLE_ARN=$AWS_ROLE_ARN >> $GITHUB_ENV
              echo AWS_DEFAULT_REGION=$AWS_DEFAULT_REGION >> $GITHUB_ENV
              curl -H "Authorization: bearer $ACTIONS_ID_TOKEN_REQUEST_TOKEN" "$ACTIONS_ID_TOKEN_REQUEST_URL" | jq -r '.value' > $AWS_WEB_IDENTITY_TOKEN_FILE
              # Use an absolute path so the config is found whatever the working directory is
              config="$GITHUB_WORKSPACE/.github/workflows/_config.json"
              working_directory=$( jq -r '.[] | .terraform.working_directory' "$config" )
              cd "${working_directory}"
              init=$( jq -r '.[] | .terraform.enabled' "$config" )
              if [ "$init" = true ] ; then
                terraform init
              fi

          # Checks that all Terraform configuration files adhere to a canonical format
          - name: Terraform Format
            run: |
              config="$GITHUB_WORKSPACE/.github/workflows/_config.json"
              working_directory=$( jq -r '.[] | .terraform.working_directory' "$config" )
              cd "${working_directory}"
              fmt=$( jq -r '.[] | .terraform.fmt' "$config" )
              if [ "$fmt" = true ] ; then
                # -check fails the step on badly formatted files instead of rewriting them
                terraform fmt -check
              fi

          # Checks that all Terraform configuration files are valid
          - name: Terraform Validate
            run: |
              config="$GITHUB_WORKSPACE/.github/workflows/_config.json"
              working_directory=$( jq -r '.[] | .terraform.working_directory' "$config" )
              cd "${working_directory}"
              validate=$( jq -r '.[] | .terraform.validate' "$config" )
              if [ "$validate" = true ] ; then
                terraform validate
              fi

          # Generates an execution plan for Terraform
          - name: Terraform Plan
            run: |
              # Export credentials (replace the account ID with your own)
              export AWS_ROLE_ARN=arn:aws:iam::546876102050:role/GithubOidcRole
              export AWS_WEB_IDENTITY_TOKEN_FILE=/tmp/awscreds
              export AWS_DEFAULT_REGION=eu-west-1
              echo AWS_WEB_IDENTITY_TOKEN_FILE=$AWS_WEB_IDENTITY_TOKEN_FILE >> $GITHUB_ENV
              echo AWS_ROLE_ARN=$AWS_ROLE_ARN >> $GITHUB_ENV
              echo AWS_DEFAULT_REGION=$AWS_DEFAULT_REGION >> $GITHUB_ENV
              curl -H "Authorization: bearer $ACTIONS_ID_TOKEN_REQUEST_TOKEN" "$ACTIONS_ID_TOKEN_REQUEST_URL" | jq -r '.value' > $AWS_WEB_IDENTITY_TOKEN_FILE
              config="$GITHUB_WORKSPACE/.github/workflows/_config.json"
              working_directory=$( jq -r '.[] | .terraform.working_directory' "$config" )
              cd "${working_directory}"
              plan=$( jq -r '.[] | .terraform.plan' "$config" )
              if [ "$plan" = true ] ; then
                terraform plan -lock=false
              fi

          # On push to main, build or change infrastructure according to Terraform configuration files
          # Note: It is recommended to set up a required "strict" status check in your repository for "Terraform Cloud". See the documentation on "strict" required status checks for more information: https://help.github.com/en/github/administering-a-repository/types-of-required-status-checks
          # - name: Terraform Apply
          #   working-directory: ./terraform
          #   env:
          #     AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
          #     AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          #   if: github.ref == 'true' && github.event_name == 'push'
          #   run: terraform apply -auto-approve

          # Checks that IF statement works
          # - name: Go Variables
          #   # if: ${{ github.ref == 'true' }}
          #   if : ${{fromJson(terraform.enabled.true)}}
          #   run: go env

Testing our deployment

In order to test that we can successfully deploy into our AWS environment, we’re going to deploy a new VPC using the VPC module from the Terraform Registry.

In the vpc.tf file, add the following:

    module "vpc" {
      source = "terraform-aws-modules/vpc/aws"

      name = "my-vpc"
      cidr = "10.0.0.0/16"

      azs             = ["eu-west-1a", "eu-west-1b", "eu-west-1c"]
      private_subnets = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
      public_subnets  = ["10.0.101.0/24", "10.0.102.0/24", "10.0.103.0/24"]

      enable_nat_gateway = true
      enable_vpn_gateway = true

      tags = local.common_tags
    }
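Two things are worth keeping in mind with this module block. Without a version argument, Terraform downloads the newest release of the module, which may require a newer Terraform or AWS provider than the ones pinned in terraform.tf. NAT gateways are also billed by the hour and are not covered by the Free Tier. The variant below is a sketch that pins the module and uses a single shared NAT gateway. The version constraint is an assumption for illustration only, so check which release line matches your Terraform and provider versions before using it.

```hcl
module "vpc" {
  source = "terraform-aws-modules/vpc/aws"
  # Assumed constraint, for illustration: choose the release line that is
  # compatible with your Terraform and AWS provider versions.
  version = "~> 3.0"

  name = "my-vpc"
  cidr = "10.0.0.0/16"

  azs             = ["eu-west-1a", "eu-west-1b", "eu-west-1c"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24", "10.0.103.0/24"]

  enable_nat_gateway = true
  # One shared NAT gateway instead of one per private subnet, to limit cost
  single_nat_gateway = true
  enable_vpn_gateway = true

  tags = local.common_tags
}
```

Remember to destroy the VPC once you have finished testing, since the NAT gateway continues to incur charges for as long as it exists.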

Since we have set up our basic validation and linting procedures as well as our terraform plan operation to run when we push to a feature branch, let’s do that and make sure that everything passes as expected.